Photo by Neenu Vimalkumar on Unsplash

Azure Functions - Developing Solutions for Microsoft Azure - Part 1.3

Table of contents

- 1. Develop Azure Compute Solutions

- 1.3 Implement Azure Functions

- Azure Functions

- Compare Azure Functions hosting options

- Scale Azure Functions

- Azure Functions development

- Create triggers and bindings

- Azure Functions binding expression patterns

- Connect functions to Azure services

- Local settings file

- Azure Queue storage trigger and bindings for Azure Functions

- Azure Functions Core Tools

- PRACTICAL 06: Create an Azure Function by using Visual Studio Code

- Custom Handlers

- Durable Functions app patterns

- Types of Functions

- Task hubs in Durable Functions

- Explore durable orchestrations

- Control timing in Durable Functions

- Send and wait for events

All the notes on this page have been taken from Microsoft's learning portal - learn.microsoft.com. If any of this material is copyrighted, please let me know in the comments below and I will remove it. The main goal here is to learn and understand the concepts for developing solutions for Microsoft Azure. These notes may be helpful to prepare for the certification AZ-204: Developing Solutions for Microsoft Azure

Continuing from Azure app service - Developing Solutions for Microsoft Azure - Part 1.2

1. Develop Azure Compute Solutions

1.3 Implement Azure Functions

Azure Functions

Azure Functions lets you develop serverless applications on Microsoft Azure. You can write just the code you need for the problem at hand, without worrying about implementing a whole application or setting up the infrastructure to run it.

Consider Functions for tasks like image or order processing, file maintenance, or for any tasks that you want to run on a schedule. Functions provides templates to get you started with key scenarios.

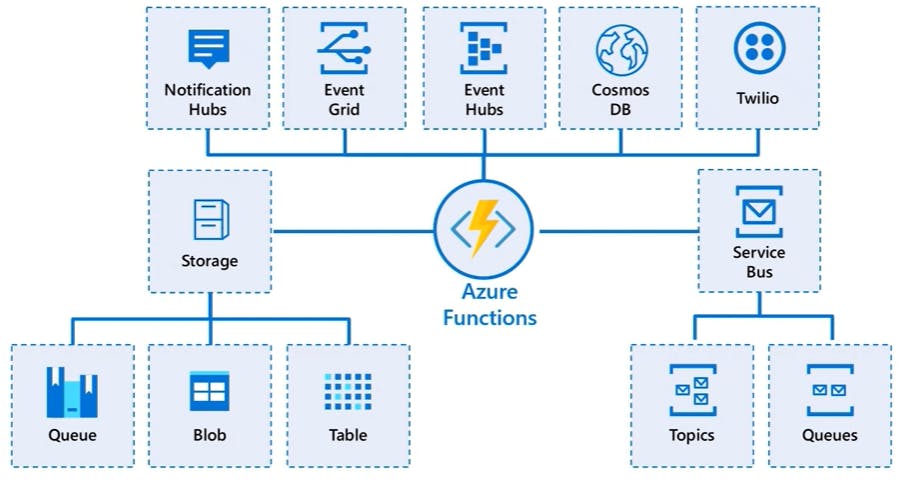

Azure Functions supports triggers, which are ways to start execution of your code, and bindings, which are ways to simplify coding for input and output data.

Compare Azure Functions and Azure Logic Apps

Both Functions and Logic Apps enable serverless workloads. Azure Functions is a serverless compute service, whereas Azure Logic Apps provides serverless workflows. Both can create complex orchestrations. An orchestration is a collection of functions or steps, called actions in Logic Apps, that are executed to accomplish a complex task.

For Azure Functions, you develop orchestrations by writing code and using the Durable Functions extension. For Logic Apps, you create orchestrations by using a GUI or editing configuration files.

You can mix and match services when you build an orchestration, calling functions from logic apps and calling logic apps from functions. The following table lists some of the key differences between these:

| | Azure Functions | Logic Apps |
| --- | --- | --- |
| Development | Code-first (imperative) | Designer-first (declarative) |
| Connectivity | About a dozen built-in binding types, write code for custom bindings | Large collection of connectors, Enterprise Integration Pack for B2B scenarios, build custom connectors |
| Actions | Each activity is an Azure function; write code for activity functions | Large collection of ready-made actions |
| Monitoring | Azure Application Insights | Azure portal, Azure Monitor logs |
| Management | REST API, Visual Studio | Azure portal, REST API, PowerShell, Visual Studio |
| Execution context | Can run locally or in the cloud | Supports run-anywhere scenarios |

Compare Functions and WebJobs

Like Azure Functions, Azure App Service WebJobs with the WebJobs SDK is a code-first integration service that is designed for developers. Both are built on Azure App Service and support features such as source control integration, authentication, and monitoring with Application Insights integration.

Azure Functions is built on the WebJobs SDK, so it shares many of the same event triggers and connections to other Azure services. Here are some factors to consider when you're choosing between Azure Functions and WebJobs with the WebJobs SDK:

| | Functions | WebJobs with WebJobs SDK |
| --- | --- | --- |
| Serverless app model with automatic scaling | Yes | No |
| Develop and test in browser | Yes | No |
| Pay-per-use pricing | Yes | No |
| Integration with Logic Apps | Yes | No |
| Trigger events | Timer, Azure Storage queues and blobs, Azure Service Bus queues and topics, Azure Cosmos DB, Azure Event Hubs, HTTP/WebHook (GitHub, Slack), Azure Event Grid | Timer, Azure Storage queues and blobs, Azure Service Bus queues and topics, Azure Cosmos DB, Azure Event Hubs, File system |

Azure Functions offers more developer productivity than Azure App Service WebJobs does. It also offers more options for programming languages, development environments, Azure service integration, and pricing. For most scenarios, it's the best choice.

Compare Azure Functions hosting options

There are three basic hosting plans available for Azure Functions: Consumption plan, Functions Premium plan, and App Service (Dedicated) plan. All hosting plans are generally available (GA) on both Linux and Windows virtual machines.

The hosting plan you choose dictates the following behaviors:

- How your function app is scaled.

- The resources available to each function app instance.

- Support for advanced functionality, such as Azure Virtual Network connectivity.

The following is a summary of the benefits of the three main hosting plans for Functions:

| Plan | Benefits |
| --- | --- |
| Consumption plan | This is the default hosting plan. It scales automatically and you only pay for compute resources when your functions are running. Instances of the Functions host are dynamically added and removed based on the number of incoming events. |
| Premium plan | Automatically scales based on demand using pre-warmed workers, which run applications with no delay after being idle, runs on more powerful instances, and connects to virtual networks. |
| Dedicated plan | Run your functions within an App Service plan at regular App Service plan rates. Best for long-running scenarios where Durable Functions can't be used. |

There are two other hosting options, which provide the highest amount of control and isolation in which to run your function apps.

| Hosting option | Details |
| --- | --- |
| ASE | App Service Environment (ASE) is an App Service feature that provides a fully isolated and dedicated environment for securely running App Service apps at high scale. |
| Kubernetes | Kubernetes provides a fully isolated and dedicated environment running on top of the Kubernetes platform. For more information, see Azure Functions on Kubernetes with KEDA. |

Always on

If you run on a Dedicated plan, you should enable the Always on setting so that your function app runs correctly. On an App Service plan, the functions runtime goes idle after a few minutes of inactivity, so only HTTP triggers will "wake up" your functions. Always on is available only on an App Service plan. On a Consumption plan, the platform activates function apps automatically.

Storage account requirements

On any plan, a function app requires a general Azure Storage account, which supports Azure Blob, Queue, Files, and Table storage. This is because Functions relies on Azure Storage for operations such as managing triggers and logging function executions.

The same storage account used by your function app can also be used by your triggers and bindings to store your application data. However, for storage-intensive operations, you should use a separate storage account.

Scale Azure Functions

In the Consumption and Premium plans, Azure Functions scales CPU and memory resources by adding additional instances of the Functions host. The number of instances is determined by the number of events that trigger a function. Each instance of the Functions host in the Consumption plan is limited to 1.5 GB of memory and one CPU.

Function code files are stored on Azure Files shares on the function's main storage account. When you delete the main storage account of the function app, the function code files are deleted and cannot be recovered.

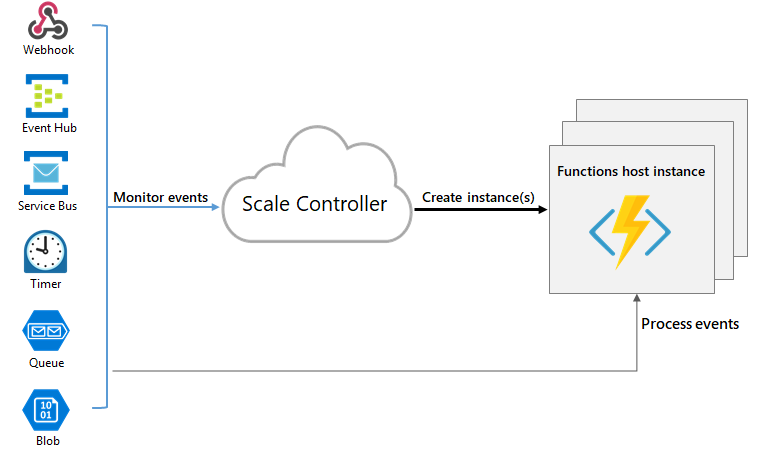

Runtime scaling

Azure Functions uses a component called the scale controller to monitor the rate of events and determine whether to scale out or scale in. The scale controller uses heuristics for each trigger type. For example, when you're using an Azure Queue storage trigger, it scales based on the queue length and the age of the oldest queue message.

The unit of scale for Azure Functions is the function app. When the function app is scaled out, additional resources are allocated to run multiple instances of the Azure Functions host. Conversely, as compute demand is reduced, the scale controller removes function host instances. The number of instances is eventually "scaled in" to zero when no functions are running within a function app.

Note: After your function app has been idle for a number of minutes, the platform may scale the number of instances on which your app runs in to zero. The next request has the added latency of scaling from zero to one. This latency is referred to as a cold start.

Scaling behaviors

There are a few intricacies of scaling behaviors to be aware of:

- Maximum instances: A single function app only scales out to a maximum of 200 instances. A single instance may process more than one message or request at a time though, so there isn't a set limit on the number of concurrent executions.

- New instance rate: For HTTP triggers, new instances are allocated, at most, once per second. For non-HTTP triggers, new instances are allocated, at most, once every 30 seconds.

Limit scale out

You may wish to restrict the maximum number of instances an app uses to scale out. This is most common for cases where a downstream component like a database has limited throughput. By default, Consumption plan functions scale out to as many as 200 instances, and Premium plan functions scale out to as many as 100 instances. You can specify a lower maximum for a specific app by modifying the functionAppScaleLimit value. The functionAppScaleLimit can be set to 0 or null for unrestricted, or a valid value between 1 and the app maximum.
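The rules for functionAppScaleLimit can be expressed as a small helper. This is only an illustrative sketch: `effectiveScaleLimit` and `PLAN_MAX_INSTANCES` are hypothetical names, and the plan maximums are the defaults quoted in this section rather than values read from Azure.

```javascript
// Default maximum instance counts quoted in this section (assumed, not queried from Azure).
const PLAN_MAX_INSTANCES = { consumption: 200, premium: 100 };

// Returns the instance ceiling that a given functionAppScaleLimit would produce.
// 0 or null means "unrestricted", so the plan maximum applies.
function effectiveScaleLimit(plan, functionAppScaleLimit) {
  const planMax = PLAN_MAX_INSTANCES[plan];
  if (planMax === undefined) {
    throw new Error(`Unknown plan: ${plan}`);
  }
  if (functionAppScaleLimit === null || functionAppScaleLimit === 0) {
    return planMax;
  }
  if (
    !Number.isInteger(functionAppScaleLimit) ||
    functionAppScaleLimit < 1 ||
    functionAppScaleLimit > planMax
  ) {
    throw new RangeError(
      `functionAppScaleLimit must be 0, null, or between 1 and ${planMax}`
    );
  }
  return functionAppScaleLimit;
}

console.log(effectiveScaleLimit("consumption", null)); // 200
console.log(effectiveScaleLimit("premium", 5)); // 5
```

A check like this could run in a deployment script before the setting is applied, so that an out-of-range value fails early.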

Azure Functions scaling in an App Service plan

Using an App Service plan, you can manually scale out by adding more VM instances. You can also enable autoscale, though autoscale will be slower than the elastic scale of the Premium plan.

Azure Functions development

A function contains two important pieces - your code, and config in the function.json file. For compiled languages, this config file is generated automatically from annotations in your code. For scripting languages, you must provide the config file yourself.

The function.json file defines the function's trigger, bindings, and other configuration settings. Every function has one and only one trigger. The runtime uses this config file to determine the events to monitor and how to pass data into and return data from a function execution. The following is an example function.json file.

{

"disabled":false,

"bindings":[

// ... bindings here

{

"type": "bindingType",

"direction": "in",

"name": "myParamName",

// ... more depending on binding

}

]

}

The bindings property is where you configure both triggers and bindings. Every binding requires the following settings:

| Property | Types | Comments |
| --- | --- | --- |
| type | string | Name of binding. For example, queueTrigger. |
| direction | string | Indicates whether the binding is for receiving data into the function or sending data from the function. For example, in or out. |
| name | string | The name that is used for the bound data in the function. For example, myQueue. |
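The required settings and the one-trigger rule can be checked with a few lines of code. The following is a minimal sketch, not part of the Functions tooling: `validateFunctionJson` is a hypothetical helper, and it assumes that trigger types can be recognized by the "Trigger" suffix in their type name, which holds for the binding types mentioned in these notes.

```javascript
// Checks the rules described above: every binding needs type, direction, and name,
// and a function has exactly one trigger. Returns a list of problems (empty if valid).
function validateFunctionJson(config) {
  const problems = [];
  const bindings = Array.isArray(config.bindings) ? config.bindings : [];

  bindings.forEach((binding, index) => {
    for (const key of ["type", "direction", "name"]) {
      if (typeof binding[key] !== "string" || binding[key] === "") {
        problems.push(`bindings[${index}] is missing "${key}"`);
      }
    }
  });

  // Assumption: trigger binding types end in "Trigger" (queueTrigger, httpTrigger, ...).
  const triggers = bindings.filter(
    (b) => typeof b.type === "string" && b.type.endsWith("Trigger")
  );
  if (triggers.length !== 1) {
    problems.push(`expected exactly one trigger, found ${triggers.length}`);
  }
  return problems;
}

const sample = {
  bindings: [
    { type: "queueTrigger", direction: "in", name: "myQueue", queueName: "myqueue-items" },
    { type: "table", direction: "out", name: "$return", tableName: "outTable" },
  ],
};
console.log(validateFunctionJson(sample)); // []
```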

Function app

A function app provides an execution context in Azure in which your functions run. As such, it is the unit of deployment and management for your functions. A function app is composed of one or more individual functions that are managed, deployed, and scaled together. All of the functions in a function app share the same pricing plan, deployment method, and runtime version. Think of a function app as a way to organize and collectively manage your functions.

Note: In Functions 2.x all functions in a function app must be authored in the same language. In previous versions of the Azure Functions runtime, this wasn't required.

Folder structure

The code for all the functions in a specific function app is located in a root project folder that contains a host configuration file. The host.json file contains runtime-specific configurations and is in the root folder of the function app. A bin folder contains packages and other library files that the function app requires. Specific folder structures required by the function app depend on the language.

Local development environments

The way in which you develop functions on your local computer depends on your language and tooling preferences. See Code and test Azure Functions locally for more information.

Note: Do not mix local development with portal development in the same function app. When you create and publish functions from a local project, you should not try to maintain or modify project code in the portal.

Create triggers and bindings

Overview

Triggers are what cause a function to run. A trigger defines how a function is invoked and a function must have exactly one trigger.

Binding to a function is a way of declaratively connecting another resource to the function; bindings may be connected as input bindings, output bindings, or both.

You can mix and match different bindings to suit your needs.

Triggers and bindings let you avoid hardcoding access to other services.

Trigger and binding definitions

Triggers and bindings are defined differently depending on the development language.

| Language | Triggers and bindings are configured by... |
| --- | --- |
| C# class library | decorating methods and parameters with C# attributes |
| Java | decorating methods and parameters with Java annotations |
| JavaScript/PowerShell/Python/TypeScript | updating function.json schema |

For languages that rely on function.json, the portal provides a UI for adding bindings in the Integration tab.

In .NET and Java, the parameter type defines the data type for input data. For instance, use string to bind to the text of a queue trigger, a byte array to read as binary, and a custom type to de-serialize to an object. Since .NET class library functions and Java functions don't rely on function.json for binding definitions, they can't be created and edited in the portal. C# portal editing is based on C# script, which uses function.json instead of attributes.

For languages that are dynamically typed such as JavaScript, use the dataType property in the function.json file. For example, to read the content of an HTTP request in binary format, set dataType to binary:

{

"dataType": "binary",

"type": "httpTrigger",

"name": "req",

"direction": "in"

}

Other options for dataType are stream and string.

Binding direction

All triggers and bindings have a direction property in the function.json file:

- For triggers, the direction is always in

- Input and output bindings use in and out

- Some bindings support a special direction inout. If you use inout, only the Advanced editor is available via the Integrate tab in the portal.

- When you use attributes in a class library to configure triggers and bindings, the direction is provided in an attribute constructor or inferred from the parameter type.

Azure Functions trigger and binding example

Example 1

Suppose you want to write a new document to Azure Cosmos DB whenever a new message appears in Azure Queue storage. The bindings array could look like this:

"bindings":[

{

"name": "order",

"type": "queueTrigger",

"direction": "in",

//other properties

},

{

"name": "doc",

"type": "cosmosDB",

"direction": "out",

//other properties

}

]

Example 2

Suppose you want to write a new row to Azure Table storage whenever a new message appears in Azure Queue storage. This scenario can be implemented using an Azure Queue storage trigger and an Azure Table storage output binding.

Here's a function.json file for this scenario.

{

"bindings": [

{

"type": "queueTrigger",

"direction": "in",

"name": "order",

"queueName": "myqueue-items",

"connection": "MY_STORAGE_ACCT_APP_SETTING"

},

{

"type": "table",

"direction": "out",

"name": "$return",

"tableName": "outTable",

"connection": "MY_TABLE_STORAGE_ACCT_APP_SETTING"

}

]

}

The first element in the bindings array is the Queue storage trigger. The type and direction properties identify the trigger. The name property identifies the function parameter that receives the queue message content. The name of the queue to monitor is in queueName, and the connection string is in the app setting identified by connection.

The second element in the bindings array is the Azure Table Storage output binding. The type and direction properties identify the binding. The name property specifies how the function provides the new table row, in this case by using the function return value. The name of the table is in tableName, and the connection string is in the app setting identified by connection.

Example 3

Suppose that once every hour you want to read new log files generated by your application and store the logs in Azure Cosmos DB.

Use a Timer trigger, because the function runs as a scheduled job rather than in response to an event. Use an input (in) binding for Blob storage and an output (out) binding for Azure Cosmos DB: each time the timer fires, the function reads data from Blob storage, processes it, and writes the results to Azure Cosmos DB.
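A rough sketch of Example 3 in JavaScript might look like the following. Everything named here is an assumption made for illustration: the binding names (`hourlyTimer`, `logFile`, `logDocument`), the blob path, the app setting names, and the Cosmos DB property names, which differ between versions of the Cosmos DB extension. The `functionJson` object only mirrors what a function.json file for this scenario could contain.

```javascript
// Hypothetical function.json for this scenario, written as a JavaScript object for reference.
const functionJson = {
  bindings: [
    // Runs at minute 0 of every hour (six-field NCRONTAB expression).
    { type: "timerTrigger", direction: "in", name: "hourlyTimer", schedule: "0 0 * * * *" },
    // Input binding: the runtime reads this blob before the function starts.
    { type: "blob", direction: "in", name: "logFile", path: "app-logs/latest.log", connection: "LOG_STORAGE_APP_SETTING" },
    // Output binding: property names vary by Cosmos DB extension version (assumed here).
    { type: "cosmosDB", direction: "out", name: "logDocument", databaseName: "diagnostics", containerName: "logs", connection: "COSMOS_APP_SETTING" },
  ],
};

// Turns raw log text into a single document that holds the non-empty lines.
function toLogDocument(logText, readAt) {
  const lines = logText.split(/\r?\n/).filter((line) => line.trim() !== "");
  return { id: `log-${readAt}`, readAt, lines };
}

// The timer starts the run, the blob input supplies the data,
// and assigning to the output binding writes the document.
async function run(context, hourlyTimer) {
  const logText = String(context.bindings.logFile ?? "");
  context.bindings.logDocument = toLogDocument(logText, new Date().toISOString());
}

module.exports = run;
```

Keeping the text processing in `toLogDocument` makes that part testable without the Functions host.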

Example 4

Suppose that every time a new user signs up for your application, you want to send a welcome email.

First, develop an API that sends an email when it receives a request. Use an HTTP trigger, because the function runs in response to that request. The function doesn't read any data from another service when it starts, so it needs no input binding; for the output binding, use SendGrid, which lets the function send email.
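Example 4 could be sketched in JavaScript as shown below. The binding names (`req`, `res`, `message`), the sender address, and the shape of the request body are assumptions for illustration; the message layout follows the SendGrid mail format with personalizations, from, subject, and content.

```javascript
// Builds the message object handed to a SendGrid output binding.
function buildWelcomeMessage(user) {
  return {
    personalizations: [{ to: [{ email: user.email }] }],
    from: { email: "welcome@example.com" }, // hypothetical sender address
    subject: `Welcome, ${user.name}!`,
    content: [{ type: "text/plain", value: `Hi ${user.name}, thanks for signing up.` }],
  };
}

// HTTP-triggered handler: there is no input binding; the request body carries the new user.
// Assumes function.json declares an httpTrigger named "req", an http output named "res",
// and a sendGrid output binding named "message".
async function sendWelcomeEmail(context, req) {
  const user = req.body;
  if (!user || !user.email || !user.name) {
    context.res = { status: 400, body: "Expected a JSON body with name and email." };
    return;
  }
  context.bindings.message = buildWelcomeMessage(user);
  context.res = { status: 202, body: "Welcome email queued." };
}

module.exports = sendWelcomeEmail;
```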

C# script example

Here's C# script code that works with the trigger and binding from Example 2. Notice that the name of the parameter that provides the queue message content is order; this name is required because the name property value in function.json is order.

#r "Newtonsoft.Json"

using Microsoft.Extensions.Logging;

using Newtonsoft.Json.Linq;

// From an incoming queue message that is a JSON object, add fields and write to Table storage

// The method return value creates a new row in Table Storage

public static Person Run(JObject order, ILogger log)

{

return new Person() {

PartitionKey = "Orders",

RowKey = Guid.NewGuid().ToString(),

Name = order["Name"].ToString(),

MobileNumber = order["MobileNumber"].ToString() };

}

public class Person

{

public string PartitionKey { get; set; }

public string RowKey { get; set; }

public string Name { get; set; }

public string MobileNumber { get; set; }

}

JavaScript example

The same function.json file can be used with a JavaScript function:

// From an incoming queue message that is a JSON object, add fields and write to Table Storage

module.exports = async function (context, order) {

order.PartitionKey = "Orders";

order.RowKey = generateRandomId();

// The "$return" output binding writes the returned object as the new table row

return order;

};

function generateRandomId() {

return Math.random().toString(36).substring(2, 15) +

Math.random().toString(36).substring(2, 15);

}

Class library example

In a class library, the same trigger and binding information — queue and table names, storage accounts, function parameters for input and output — is provided by attributes instead of a function.json file. Here's an example:

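using System;

using Microsoft.Azure.WebJobs;

using Microsoft.Extensions.Logging;

using Newtonsoft.Json.Linq;

public static class QueueTriggerTableOutput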

{

[FunctionName("QueueTriggerTableOutput")]

[return: Table("outTable", Connection = "MY_TABLE_STORAGE_ACCT_APP_SETTING")]

public static Person Run(

[QueueTrigger("myqueue-items", Connection = "MY_STORAGE_ACCT_APP_SETTING")]JObject order,

ILogger log)

{

return new Person() {

PartitionKey = "Orders",

RowKey = Guid.NewGuid().ToString(),

Name = order["Name"].ToString(),

MobileNumber = order["MobileNumber"].ToString() };

}

}

public class Person

{

public string PartitionKey { get; set; }

public string RowKey { get; set; }

public string Name { get; set; }

public string MobileNumber { get; set; }

}

Azure Functions binding expression patterns

One of the most powerful features of triggers and bindings is binding expressions. In the function.json file and in function parameters and code, you can use expressions that resolve to values from various sources.

Most expressions are identified by wrapping them in curly braces. For example, in a queue trigger function, {queueTrigger} resolves to the queue message text. If the path property for a blob output binding is container/{queueTrigger} and the function is triggered by a queue message HelloWorld, a blob named HelloWorld is created.

Types of binding expressions

Binding expressions - app settings

As a best practice, secrets and connection strings should be managed using app settings, rather than configuration files. This limits access to these secrets and makes it safe to store files such as function.json in public source control repositories.

App setting binding expressions are identified differently from other binding expressions: they are wrapped in percent signs rather than curly braces. For example, if the blob output binding path is %Environment%/newblob.txt and the Environment app setting value is Development, a blob is created in the Development container.

When a function is running locally, app setting values come from the local.settings.json file.

Note: The connection property of triggers and bindings is a special case and automatically resolves values as app settings, without percent signs.
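To make the percent-sign substitution concrete, here is a small sketch that mimics it. It is not the runtime's implementation: `resolveAppSettings` is a hypothetical helper, and the choice to throw on a missing setting is a design decision of this sketch.

```javascript
// Replaces %name% tokens with app setting values, similar in spirit to how
// app setting binding expressions are resolved.
function resolveAppSettings(expression, settings = process.env) {
  return expression.replace(/%([^%]+)%/g, (match, name) => {
    if (!(name in settings)) {
      throw new Error(`App setting "${name}" is not defined`);
    }
    return settings[name];
  });
}

console.log(resolveAppSettings("%Environment%/newblob.txt", { Environment: "Development" }));
// Development/newblob.txt
```

By default the helper reads from `process.env`, which is where app settings appear as environment variables.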

The following example is an Azure Queue Storage trigger that uses an app setting %input_queue_name% to define the queue to trigger on.

{

"bindings": [

{

"name": "order",

"type": "queueTrigger",

"direction": "in",

"queueName": "%input_queue_name%",

"connection": "MY_STORAGE_ACCT_APP_SETTING"

}

]

}

You can use the same approach in class libraries:

[FunctionName("QueueTrigger")]

public static void Run(

[QueueTrigger("%input_queue_name%")]string myQueueItem,

ILogger log)

{

log.LogInformation($"C# Queue trigger function processed: {myQueueItem}");

}

Trigger file name

The path for a Blob trigger can be a pattern that lets you refer to the name of the triggering blob in other bindings and function code. The pattern can also include filtering criteria that specify which blobs can trigger a function invocation.

For example, in the following Blob trigger binding, the path pattern is sample-images/{filename}, which creates a binding expression named filename:

{

"bindings": [

{

"name": "image",

"type": "blobTrigger",

"path": "sample-images/{filename}",

"direction": "in",

"connection": "MyStorageConnection"

},

...

The expression filename can then be used in an output binding to specify the name of the blob being created:

...

{

"name": "imageSmall",

"type": "blob",

"path": "sample-images-sm/{filename}",

"direction": "out",

"connection": "MyStorageConnection"

}

]

}

Function code has access to this same value by using filename as a parameter name:

// C# example of binding to {filename}

public static void Run(Stream image, string filename, Stream imageSmall, ILogger log)

{

log.LogInformation($"Blob trigger processing: {filename}");

// ...

}

The same ability to use binding expressions and patterns applies to attributes in class libraries. In the following example, the attribute constructor parameters are the same path values as the preceding function.json examples:

[FunctionName("ResizeImage")]

public static void Run(

[BlobTrigger("sample-images/{filename}")] Stream image,

[Blob("sample-images-sm/{filename}", FileAccess.Write)] Stream imageSmall,

string filename,

ILogger log)

{

log.LogInformation($"Blob trigger processing: {filename}");

// ...

}

You can also create expressions for parts of the file name. In the following example, the function is triggered only by blobs whose names match the pattern anyname-anyfile.csv:

{

"name": "myBlob",

"type": "blobTrigger",

"direction": "in",

"path": "testContainerName/{date}-{filetype}.csv",

"connection": "OrderStorageConnection"

}

For more information on how to use expressions and patterns in the Blob path string, see the Storage blob binding reference.
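One way to picture how a path pattern filters blobs and produces named values is to translate it into a regular expression. The sketch below does that with two hypothetical helpers, `patternToRegExp` and `matchBlobPath`. It is an approximation for learning purposes; the matching rules of the real runtime may differ, for example in how slashes inside blob names are handled.

```javascript
// Converts a blob path pattern such as "container/{date}-{filetype}.csv" into a
// regular expression with one named capture group per placeholder.
function patternToRegExp(pattern) {
  const source = pattern
    .split(/(\{[A-Za-z_]\w*\})/) // keep the {placeholder} tokens as separate parts
    .map((part) => {
      const placeholder = /^\{([A-Za-z_]\w*)\}$/.exec(part);
      return placeholder
        ? `(?<${placeholder[1]}>[^/]+?)` // placeholder becomes a named group
        : part.replace(/[.*+?^${}()|[\]\\]/g, "\\$&"); // literal text is escaped
    })
    .join("");
  return new RegExp(`^${source}$`);
}

// Returns the placeholder values if the blob path matches, otherwise null.
function matchBlobPath(pattern, blobPath) {
  const match = patternToRegExp(pattern).exec(blobPath);
  return match ? { ...match.groups } : null;
}

const pattern = "testContainerName/{date}-{filetype}.csv";
console.log(matchBlobPath(pattern, "testContainerName/2024-orders.csv"));
// { date: '2024', filetype: 'orders' }
console.log(matchBlobPath(pattern, "testContainerName/readme.txt"));
// null
```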

Trigger metadata

In addition to the data payload provided by a trigger (such as the content of the queue message that triggered a function), many triggers provide additional metadata values. These values can be used as input parameters in C# or properties on the context.bindings object in JavaScript.

For example, an Azure Queue storage trigger supports the following properties:

- QueueTrigger - triggering message content if a valid string

- DequeueCount

- ExpirationTime

- Id

- InsertionTime

- NextVisibleTime

- PopReceipt

These metadata values are accessible in function.json file properties. For example, suppose you use a queue trigger and the queue message contains the name of a blob you want to read. In the function.json file, you can use the queueTrigger metadata property in the blob path property, as shown in the following example:


{

"bindings": [

{

"name": "myQueueItem",

"type": "queueTrigger",

"direction": "in",

"queueName": "myqueue-items",

"connection": "MyStorageConnection"

},

{

"name": "myInputBlob",

"type": "blob",

"path": "samples-workitems/{queueTrigger}",

"direction": "in",

"connection": "MyStorageConnection"

}

]

}
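With bindings like these, the function code does not open the blob itself; the runtime resolves {queueTrigger} and passes the blob content in. A minimal JavaScript sketch of such a handler follows. The function name `processWorkItem` is hypothetical, and the handling of a missing blob is an assumption about what the binding delivers in that case.

```javascript
// Assumes a queue trigger named "myQueueItem" and a blob input binding named
// "myInputBlob" whose path is samples-workitems/{queueTrigger}.
async function processWorkItem(context, myQueueItem) {
  // The runtime has already loaded the blob named by the queue message.
  const blob = context.bindings.myInputBlob;
  if (blob === undefined || blob === null) {
    context.log(`Blob "${myQueueItem}" was not found in samples-workitems.`);
    return;
  }
  // Blob content may arrive as a Buffer or as a string, depending on configuration.
  const size = Buffer.isBuffer(blob) ? blob.length : Buffer.byteLength(String(blob));
  context.log(`Blob "${myQueueItem}" loaded (${size} bytes).`);
}

module.exports = processWorkItem;
```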

JSON payloads

When a trigger payload is JSON, you can refer to its properties in configuration for other bindings in the same function and in function code.

The following example shows the function.json file for a webhook function that receives a blob name in JSON: {"BlobName":"HelloWorld.txt"}. A Blob input binding reads the blob, and the HTTP output binding returns the blob contents in the HTTP response. Notice that the Blob input binding gets the blob name by referring directly to the BlobName property ("path": "strings/{BlobName}").

{

"bindings": [

{

"name": "info",

"type": "httpTrigger",

"direction": "in",

"webHookType": "genericJson"

},

{

"name": "blobContents",

"type": "blob",

"direction": "in",

"path": "strings/{BlobName}",

"connection": "AzureWebJobsStorage"

},

{

"name": "res",

"type": "http",

"direction": "out"

}

]

}

For this to work in C#, you need a class that defines the fields to be deserialized, as in the following example:

using System.Net;

using Microsoft.Extensions.Logging;

public class BlobInfo

{

public string BlobName { get; set; }

}

public static HttpResponseMessage Run(HttpRequestMessage req, BlobInfo info, string blobContents, ILogger log)

{

if (blobContents == null) {

return req.CreateResponse(HttpStatusCode.NotFound);

}

log.LogInformation($"Processing: {info.BlobName}");

return req.CreateResponse(HttpStatusCode.OK, new {

data = $"{blobContents}"

});

}

In JavaScript, JSON deserialization is automatically performed.

module.exports = async function (context, info) {

if ('BlobName' in info) {

context.res = {

body: { 'data': context.bindings.blobContents }

}

}

else {

context.res = {

status: 404

};

}

}

Dot notation

If some of the properties in your JSON payload are objects with properties, you can refer to those directly by using dot (.) notation. This notation doesn't work for Azure Cosmos DB or Table storage bindings.

For example, suppose your JSON looks like this:

{

"BlobName": {

"FileName":"HelloWorld",

"Extension":"txt"

}

}

You can refer directly to FileName as BlobName.FileName. With this JSON format, here's what the path property in the preceding example would look like:

"path": "strings/{BlobName.FileName}.{BlobName.Extension}",

In C#, you would need two classes:

public class BlobInfo

{

public BlobName BlobName { get; set; }

}

public class BlobName

{

public string FileName { get; set; }

public string Extension { get; set; }

}
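The dot-notation lookup can be illustrated with a short JavaScript sketch. `resolveBindingPath` is a hypothetical helper that imitates the substitution for learning purposes; it is not the code the Functions runtime uses.

```javascript
// Resolves {a.b} style expressions in a path template against a JSON payload.
function resolveBindingPath(template, payload) {
  return template.replace(/\{([^{}]+)\}/g, (match, expression) => {
    // Walk the payload one property at a time: "BlobName.FileName" -> payload.BlobName.FileName
    const value = expression
      .split(".")
      .reduce((current, key) => (current == null ? undefined : current[key]), payload);
    if (value === undefined || value === null) {
      throw new Error(`Cannot resolve binding expression ${match}`);
    }
    return String(value);
  });
}

const payload = { BlobName: { FileName: "HelloWorld", Extension: "txt" } };
console.log(resolveBindingPath("strings/{BlobName.FileName}.{BlobName.Extension}", payload));
// strings/HelloWorld.txt
```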

Create GUIDs

The {rand-guid} binding expression creates a GUID. The following blob path in a function.json file creates a blob with a name like 50710cb5-84b9-4d87-9d83-a03d6976a682.txt.

{

"type": "blob",

"name": "blobOutput",

"direction": "out",

"path": "my-output-container/{rand-guid}.txt"

}

Current time

The binding expression DateTime resolves to DateTime.UtcNow. The following blob path in a function.json file creates a blob with a name like 2018-02-16T17-59-55Z.txt.

{

"type": "blob",

"name": "blobOutput",

"direction": "out",

"path": "my-output-container/{DateTime}.txt"

}
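If you need names of the same shape in your own code, for example to log or predict the name of an output blob, something like the following sketch works in Node.js. The timestamp format is inferred from the example name 2018-02-16T17-59-55Z.txt in these notes, and `formatUtcForBlobName` is a hypothetical helper.

```javascript
const { randomUUID } = require("crypto");

// Formats a date like the {DateTime} example above: 2018-02-16T17-59-55Z
function formatUtcForBlobName(date) {
  return date
    .toISOString() // e.g. 2018-02-16T17:59:55.000Z
    .replace(/\.\d{3}Z$/, "Z") // drop the milliseconds
    .replace(/:/g, "-"); // replace colons with hyphens
}

// Comparable to {rand-guid}.txt: a random GUID followed by the extension.
const guidName = `${randomUUID()}.txt`;

// Comparable to {DateTime}.txt, using a fixed date so the output is predictable.
const timeName = `${formatUtcForBlobName(new Date(Date.UTC(2018, 1, 16, 17, 59, 55)))}.txt`;
console.log(timeName); // 2018-02-16T17-59-55Z.txt
```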

Binding at runtime

In C# and other .NET languages, you can use an imperative binding pattern, as opposed to the declarative bindings in function.json and attributes. Imperative binding is useful when binding parameters need to be computed at runtime rather than design time.

Connect functions to Azure services

Your function references connection information by name from its configuration provider. It does not directly accept the connection details, allowing them to be changed across environments. For example, a trigger definition might include a connection property. This might refer to a connection string, but you cannot set the connection string directly in a function.json. Instead, you would set connection to the name of an environment variable that contains the connection string.

The default configuration provider uses environment variables. These might be set by Application Settings when running in the Azure Functions service, or from the local settings file when developing locally.

Connection values

When the connection name resolves to a single exact value, the runtime identifies the value as a connection string, which typically includes a secret. The details of a connection string are defined by the service to which you wish to connect.

However, a connection name can also refer to a collection of multiple configuration items. Environment variables can be treated as a collection by using a shared prefix that ends in double underscores __. The group can then be referenced by setting the connection name to this prefix.

For example, the connection property for an Azure Blob trigger definition might be Storage1. As long as there is no single string value configured with Storage1 as its name, Storage1__serviceUri would be used for the serviceUri property of the connection. The connection properties are different for each service.
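The lookup rule described above can be sketched as follows. `resolveConnection` is a hypothetical helper that only illustrates the idea of an exact match versus a shared prefix; the service URI in the usage example is made up.

```javascript
// An exact match is treated as a connection string; otherwise, settings that
// share the "<name>__" prefix are collected as connection properties.
function resolveConnection(name, env = process.env) {
  if (typeof env[name] === "string" && env[name] !== "") {
    return { kind: "connectionString", value: env[name] };
  }
  const prefix = `${name}__`;
  const properties = {};
  for (const [key, value] of Object.entries(env)) {
    if (key.startsWith(prefix)) {
      properties[key.slice(prefix.length)] = value;
    }
  }
  if (Object.keys(properties).length === 0) {
    throw new Error(`No configuration found for connection "${name}"`);
  }
  return { kind: "properties", properties };
}

const exampleEnv = { Storage1__serviceUri: "https://example.blob.core.windows.net" };
console.log(resolveConnection("Storage1", exampleEnv));
// { kind: 'properties', properties: { serviceUri: 'https://example.blob.core.windows.net' } }
```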

Configure an identity-based connection

Some connections in Azure Functions are configured to use an identity instead of a secret. Support depends on the extension using the connection. In some cases, a connection string may still be required in Functions even though the service to which you are connecting supports identity-based connections.

Note: Identity-based connections are not supported with Durable Functions.

When hosted in the Azure Functions service, identity-based connections use a managed identity. The system-assigned identity is used by default, although a user-assigned identity can be specified with the credential and clientID properties. When run in other contexts, such as local development, your developer identity is used instead, although this can be customized using alternative connection parameters.

Grant permission to the identity

Whatever identity is being used must have permissions to perform the intended actions. This is typically done by assigning a role in Azure RBAC or specifying the identity in an access policy, depending on the service to which you are connecting.

Local settings file

The local.settings.json file stores app settings and settings used by local development tools. Settings in the local.settings.json file are used only when you're running your project locally. When you publish your project to Azure, be sure to also add any required settings to the app settings for the function app.

Important: Because the local.settings.json may contain secrets, such as connection strings, you should never store it in a remote repository. Tools that support Functions provide ways to synchronize settings in the local.settings.json file with the app settings in the function app to which your project is deployed.

The local settings file has this structure:

{

"IsEncrypted": false,

"Values": {

"FUNCTIONS_WORKER_RUNTIME": "<language worker>",

"AzureWebJobsStorage": "<connection-string>",

"MyBindingConnection": "<binding-connection-string>",

"AzureWebJobs.HttpExample.Disabled": "true"

},

"Host": {

"LocalHttpPort": 7071,

"CORS": "*",

"CORSCredentials": false

},

"ConnectionStrings": {

"SQLConnectionString": "<sqlclient-connection-string>"

}

}
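As a rough illustration of this structure, the Go sketch below decodes a local.settings.json document into typed fields. The `LocalSettings` and `HostSettings` types and the `parseLocalSettings` function are hypothetical names chosen for this example; Core Tools does its own parsing. Declaring `Values` as a map of strings means a non-string value is rejected at decode time.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// HostSettings mirrors the "Host" section of local.settings.json.
type HostSettings struct {
	LocalHttpPort   int    `json:"LocalHttpPort"`
	CORS            string `json:"CORS"`
	CORSCredentials bool   `json:"CORSCredentials"`
}

// LocalSettings mirrors the top-level shape of local.settings.json.
type LocalSettings struct {
	IsEncrypted       bool              `json:"IsEncrypted"`
	Values            map[string]string `json:"Values"`
	Host              HostSettings      `json:"Host"`
	ConnectionStrings map[string]string `json:"ConnectionStrings"`
}

// parseLocalSettings decodes the file contents. Because Values is a
// map[string]string, a number, object, or array value produces an error.
func parseLocalSettings(data []byte) (LocalSettings, error) {
	var s LocalSettings
	err := json.Unmarshal(data, &s)
	return s, err
}

func main() {
	sample := []byte(`{
	  "IsEncrypted": false,
	  "Values": {
	    "FUNCTIONS_WORKER_RUNTIME": "dotnet",
	    "AzureWebJobsStorage": "UseDevelopmentStorage=true"
	  },
	  "Host": { "LocalHttpPort": 7071, "CORS": "*", "CORSCredentials": false }
	}`)

	s, err := parseLocalSettings(sample)
	if err != nil {
		fmt.Println("invalid settings:", err)
		return
	}
	fmt.Println(s.Values["FUNCTIONS_WORKER_RUNTIME"], s.Host.LocalHttpPort)
}
```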

These settings are supported when you run projects locally:

| Setting | Description |
| --- | --- |
| IsEncrypted | When this setting is set to true, all values are encrypted with a local machine key. Used with func settings commands. Default value is false. You might want to encrypt the local.settings.json file on your local computer when it contains secrets, such as service connection strings. The host automatically decrypts settings when it runs. Use the func settings decrypt command before trying to read locally encrypted settings. |
| Values | Collection of application settings used when a project is running locally. These key-value (string-string) pairs correspond to application settings in your function app in Azure, like AzureWebJobsStorage. Many triggers and bindings have a property that refers to a connection string app setting, like Connection for the Blob storage trigger. For these properties, you need an application setting defined in the Values array. See the subsequent table for a list of commonly used settings. Values must be strings and not JSON objects or arrays. Setting names can't include a double underline (__) and shouldn't include a colon (:). Double underline characters are reserved by the runtime, and the colon is reserved to support dependency injection. |
| Host | Settings in this section customize the Functions host process when you run projects locally. These settings are separate from the host.json settings, which also apply when you run projects in Azure. |
| LocalHttpPort | Sets the default port used when running the local Functions host (func host start and func run). The --port command-line option takes precedence over this setting. For example, when running in Visual Studio IDE, you may change the port number by navigating to the "Project Properties -> Debug" window and explicitly specifying the port number in a host start --port <your-port-number> command that can be supplied in the "Application Arguments" field. |
| CORS | Defines the origins allowed for cross-origin resource sharing (CORS). Origins are supplied as a comma-separated list with no spaces. The wildcard value (*) is supported, which allows requests from any origin. |
| CORSCredentials | When set to true, allows withCredentials requests. |
| ConnectionStrings | A collection. Don't use this collection for the connection strings used by your function bindings. This collection is used only by frameworks that typically get connection strings from the ConnectionStrings section of a configuration file, like Entity Framework. Connection strings in this object are added to the environment with the provider type of System.Data.SqlClient. Items in this collection aren't published to Azure with other app settings. You must explicitly add these values to the Connection strings collection of your function app settings. If you're creating a SqlConnection in your function code, you should store the connection string value with your other connections in Application Settings in the portal. |

The following application settings can be included in the Values array when running locally:

| Setting | Values | Description |
| --- | --- | --- |
| AzureWebJobsStorage | Storage account connection string, or UseDevelopmentStorage=true | Contains the connection string for an Azure storage account. Required when using triggers other than HTTP. For more information, see the AzureWebJobsStorage reference. When you have the Azurite Emulator installed locally and you set AzureWebJobsStorage to UseDevelopmentStorage=true, Core Tools uses the emulator. For more information, see Local storage emulator. |
| AzureWebJobs.<FUNCTION_NAME>.Disabled | true or false | Set to true to disable the function named <FUNCTION_NAME> when running locally. |
| FUNCTIONS_WORKER_RUNTIME | dotnet, dotnet-isolated, node, java, powershell, python | Indicates the targeted language of the Functions runtime. Required for version 2.x and higher of the Functions runtime. This setting is generated for your project by Core Tools. To learn more, see the FUNCTIONS_WORKER_RUNTIME reference. |
| FUNCTIONS_WORKER_RUNTIME_VERSION | ~7 | Indicates to use PowerShell 7 when running locally. If not set, then PowerShell Core 6 is used. This setting is only used when running locally. When the app runs in Azure, the PowerShell runtime version is determined by the powerShellVersion site configuration setting, which can be set in the portal. |

Azure Queue storage trigger and bindings for Azure Functions

Azure Functions can run as new Azure Queue storage messages are created and can write queue messages within a function.

[FunctionName("ProcessOrders")]

public static void ProcessOrders([QueueTrigger("incoming-orders")]CloudQueueMessage queueItem, [Table("Orders")]ICollector<Order> tableBindings, TraceWriter log)

{

tableBindings.Add(JsonConvert.DeserializeObject<Order>(queueItem.AsString));

}

host.json settings

Settings in the host.json file apply to all functions in a function app instance.

{

"version": "2.0",

"extensions": {

"queues": {

"maxPollingInterval": "00:00:02",

"visibilityTimeout" : "00:00:30",

"batchSize": 16,

"maxDequeueCount": 5,

"newBatchThreshold": 8,

"messageEncoding": "base64"

}

}

}

| Property | Default | Description |
| --- | --- | --- |
| maxPollingInterval | 00:01:00 | The maximum interval between queue polls. The minimum interval is 00:00:00.100 (100 ms). Intervals increment up to maxPollingInterval. The default value of maxPollingInterval is 00:01:00 (1 min). maxPollingInterval must not be less than 00:00:00.100 (100 ms). In Functions 2.x and later, the data type is a TimeSpan. In Functions 1.x, it is in milliseconds. |
| visibilityTimeout | 00:00:00 | The time interval between retries when processing of a message fails. |
| batchSize | 16 | The number of queue messages that the Functions runtime retrieves simultaneously and processes in parallel. When the number being processed gets down to the newBatchThreshold, the runtime gets another batch and starts processing those messages. So the maximum number of concurrent messages being processed per function is batchSize plus newBatchThreshold. This limit applies separately to each queue-triggered function. If you want to avoid parallel execution for messages received on one queue, you can set batchSize to 1. However, this setting eliminates concurrency as long as your function app runs only on a single virtual machine (VM). If the function app scales out to multiple VMs, each VM could run one instance of each queue-triggered function. The maximum batchSize is 32. |
| maxDequeueCount | 5 | The number of times to try processing a message before moving it to the poison queue. |
| newBatchThreshold | N*batchSize/2 | Whenever the number of messages being processed concurrently gets down to this number, the runtime retrieves another batch. N represents the number of vCPUs available when running on App Service or Premium Plans. Its value is 1 for the Consumption Plan. |
| messageEncoding | base64 | This setting is only available in extension bundle version 5.0.0 and higher. It represents the encoding format for messages. Valid values are base64 and none. |
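To make the arithmetic behind batchSize and newBatchThreshold concrete, here is a small Go sketch. The function names are hypothetical, and the code only reproduces the two formulas quoted above; it does not read host.json or interact with the runtime.

```go
package main

import "fmt"

// defaultNewBatchThreshold applies the documented default N*batchSize/2,
// where N is the number of vCPUs (1 on the Consumption plan).
func defaultNewBatchThreshold(vCPUs, batchSize int) int {
	return vCPUs * batchSize / 2
}

// maxConcurrentMessages is the per-function upper bound described above:
// batchSize plus newBatchThreshold.
func maxConcurrentMessages(batchSize, newBatchThreshold int) int {
	return batchSize + newBatchThreshold
}

func main() {
	batchSize := 16
	threshold := defaultNewBatchThreshold(1, batchSize) // Consumption plan: N = 1

	fmt.Println(threshold)                                   // prints 8
	fmt.Println(maxConcurrentMessages(batchSize, threshold)) // prints 24
}
```

With the defaults on the Consumption plan, a single instance therefore processes at most 24 messages concurrently per queue-triggered function.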

Azure Functions Core Tools

Azure Functions Core Tools lets you develop and test your functions on your local computer from the command prompt or terminal.

Top Level Commands

func init: creates a new Functions project in a specific language

func logs: gets logs for functions running in a Kubernetes cluster.

func new: creates a new function in the current project based on a template

func run: enables you to invoke a function directly, which is similar to running a function using the Test tab in the Azure portal (this is only available in version 1.x)

func start: Starts the local runtime host and loads the function project in the current folder.

func deploy: replaced with func kubernetes deploy

Command groups

These contain their own set of subcommands

func azure: working with Azure resources, including publishing

func durable: working with Durable Functions

func extensions: for installing and managing extensions

func kubernetes: for working with Kubernetes and Azure functions

func settings: for managing environment settings for the local Functions host

func templates: for listing available function templates

PRACTICAL 06: Create an Azure Function by using Visual Studio Code

Prerequisites

Before you begin, make sure you have the following requirements in place:

An Azure account with an active subscription. If you don't already have one, you can sign up for a free trial at https://azure.com/free.

The Azure Functions Core Tools version 4.x.

Visual Studio Code on one of the supported platforms.

.NET 6 is the target framework for the steps below.

The C# extension for Visual Studio Code.

The Azure Functions extension for Visual Studio Code.

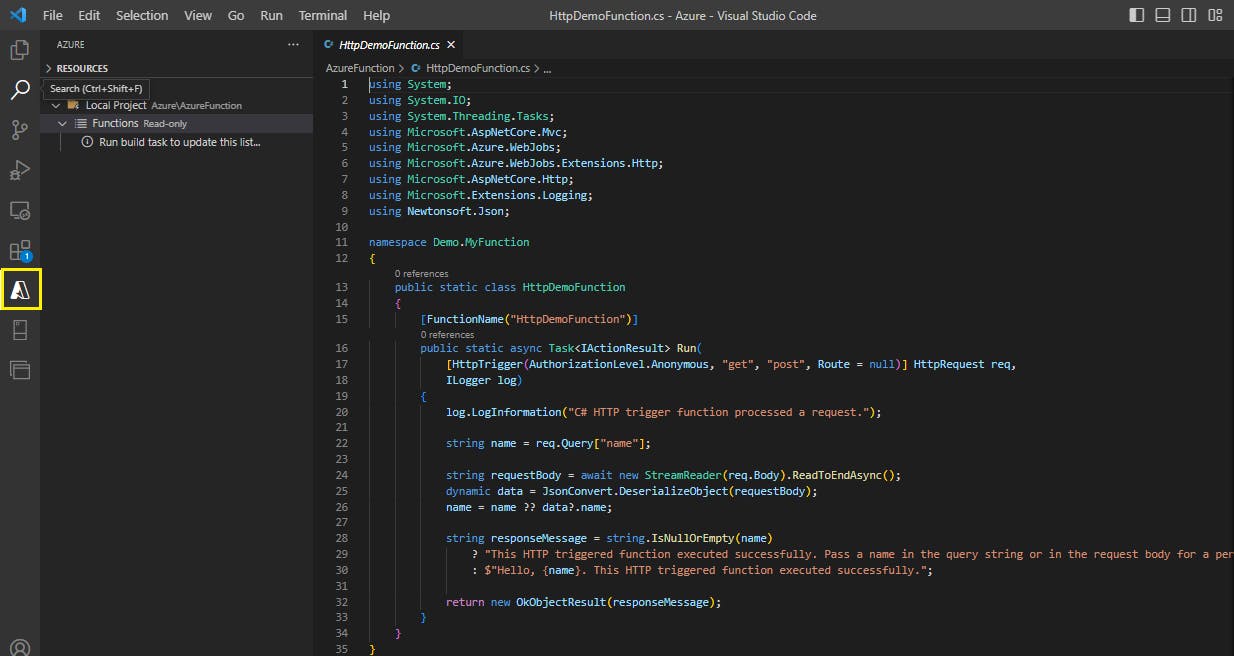

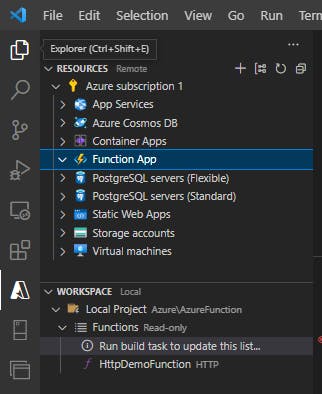

Step 1: Choose the Azure icon in the Activity bar, then in the Workspace area, select Add.... Finally, select Create Function....

A pop-up message will likely appear prompting you to create a new project. If it does, select Create new project.

Step 2: Choose a directory location for your project workspace and choose Select.

Be sure to select a project folder that is outside of an existing workspace.

Step 3: Provide the following information at the prompts:

Select a language: Choose C#.

Select a .NET runtime: Choose .NET 6.

Select a template for your project's first function: Choose HTTP trigger.

Provide a function name: Type HttpDemoFunction.

Provide a namespace: Type Demo.MyFunction.

Authorization level: Choose Anonymous, which enables anyone to call your function endpoint.

Select how you would like to open your project: Choose Add to workspace.

Using this information, Visual Studio Code generates an Azure Functions project with an HTTP trigger.

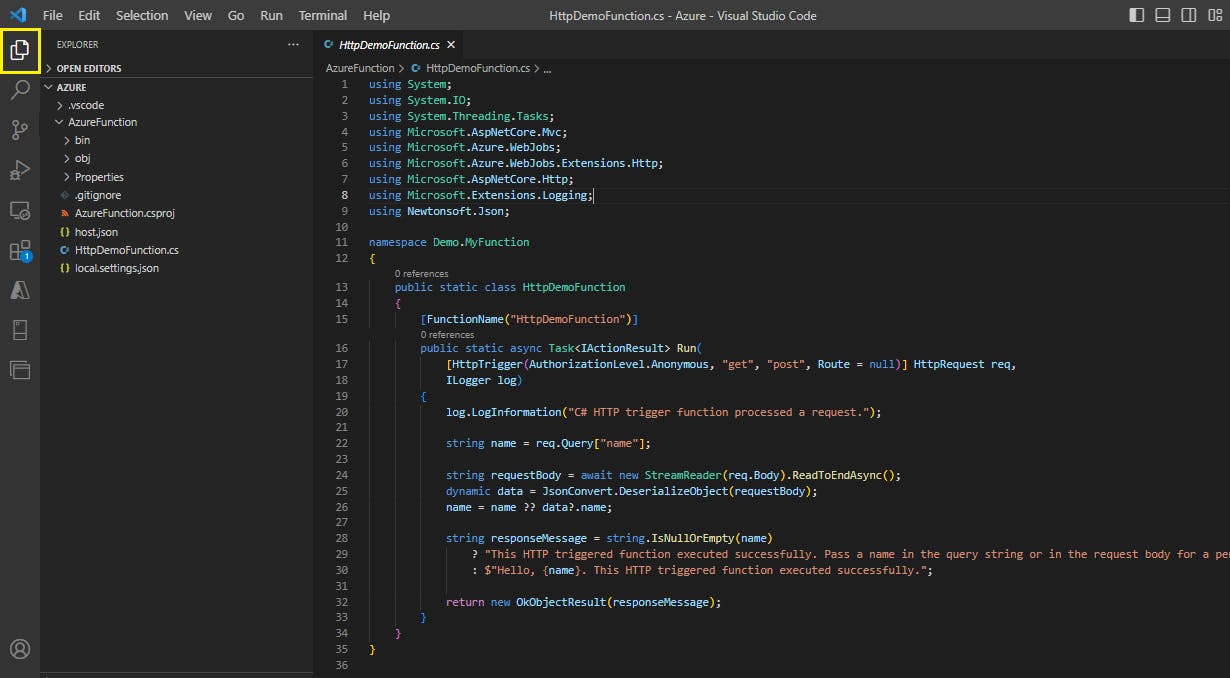

If you open the Explorer, you will see the full project structure of this function.

The code in the .cs file is as follows:

using System;

using System.IO;

using System.Threading.Tasks;

using Microsoft.AspNetCore.Mvc;

using Microsoft.Azure.WebJobs;

using Microsoft.Azure.WebJobs.Extensions.Http;

using Microsoft.AspNetCore.Http;

using Microsoft.Extensions.Logging;

using Newtonsoft.Json;

namespace Demo.MyFunction

{

public static class HttpDemoFunction

{

[FunctionName("HttpDemoFunction")]

public static async Task<IActionResult> Run(

[HttpTrigger(AuthorizationLevel.Anonymous, "get", "post", Route = null)] HttpRequest req,

ILogger log)

{

log.LogInformation("C# HTTP trigger function processed a request.");

string name = req.Query["name"];

string requestBody = await new StreamReader(req.Body).ReadToEndAsync();

dynamic data = JsonConvert.DeserializeObject(requestBody);

name = name ?? data?.name;

string responseMessage = string.IsNullOrEmpty(name)

? "This HTTP triggered function executed successfully. Pass a name in the query string or in the request body for a personalized response."

: $"Hello, {name}. This HTTP triggered function executed successfully.";

return new OkObjectResult(responseMessage);

}

}

}

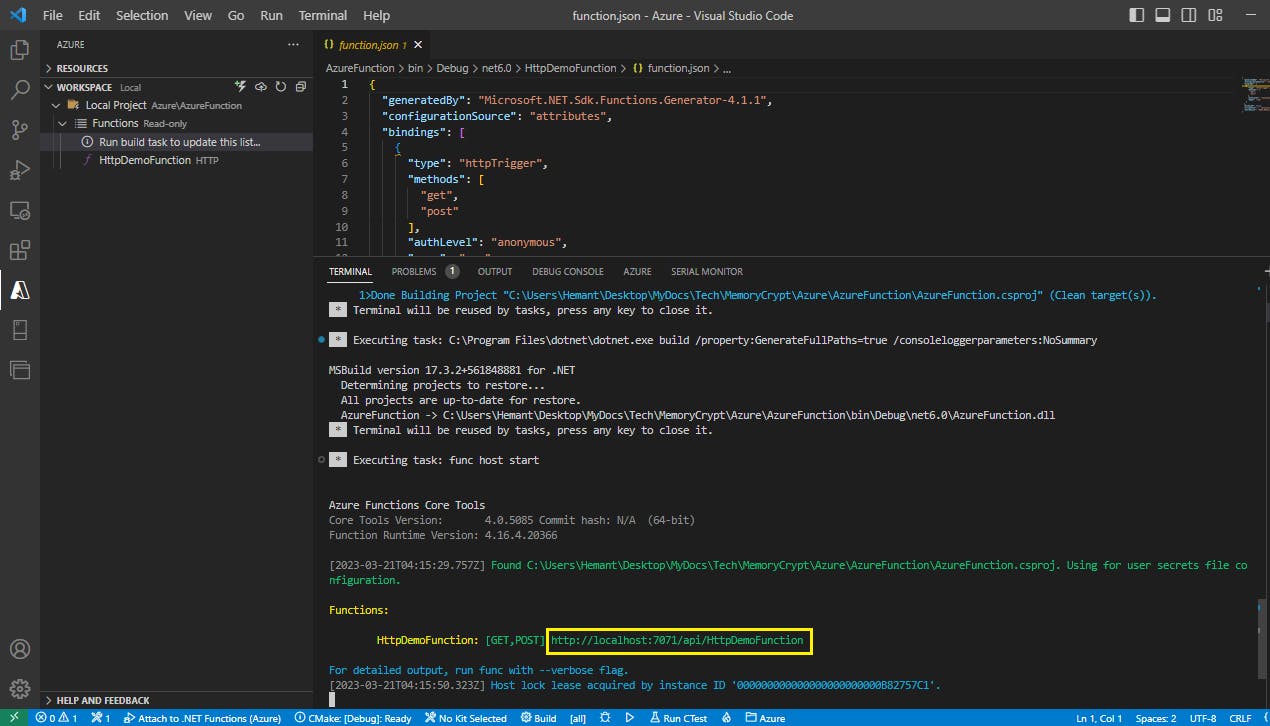

Step 4: Run the function locally

Press F5 to start the function app project in the debugger. Your app starts in the Terminal panel, where the output from Core Tools is displayed. You can see the URL endpoint of your HTTP-triggered function running locally.

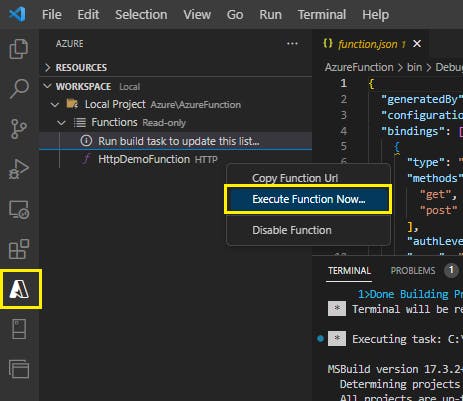

Step 5: Execute function locally

With Core Tools running, go to the Azure: Functions area. Under Functions, expand Local Project > Functions. Right-click the HttpDemoFunction function and choose Execute Function Now....

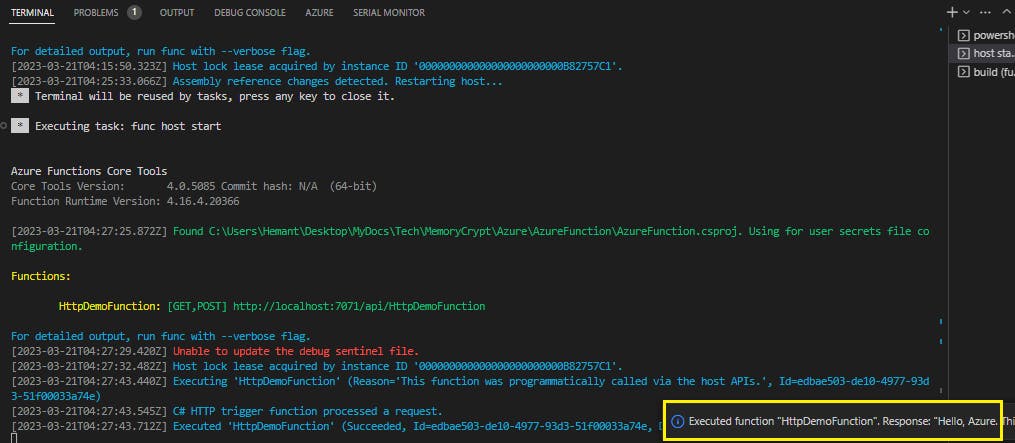

In Enter request body, type the request message body value { "name": "Azure" }.

Press Enter to send this request message to your function. When the function executes locally and returns a response, a notification is raised in Visual Studio Code. Information about the function execution is shown in the Terminal panel.
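The same request can also be sent from outside Visual Studio Code. The Go sketch below builds the POST by hand, assuming the local host listens on port 7071 (the LocalHttpPort value shown earlier) and uses the default api route prefix; `newFunctionRequest` is a hypothetical helper written for this example.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"io"
	"net/http"
)

// newFunctionRequest builds a POST to <baseURL>/api/<functionName> with the
// JSON body {"name": "<name>"}. The "api" prefix is the assumed default route.
func newFunctionRequest(baseURL, functionName, name string) (*http.Request, error) {
	body, err := json.Marshal(map[string]string{"name": name})
	if err != nil {
		return nil, err
	}
	req, err := http.NewRequest(http.MethodPost, baseURL+"/api/"+functionName, bytes.NewReader(body))
	if err != nil {
		return nil, err
	}
	req.Header.Set("Content-Type", "application/json")
	return req, nil
}

func main() {
	req, err := newFunctionRequest("http://localhost:7071", "HttpDemoFunction", "Azure")
	if err != nil {
		fmt.Println("build request:", err)
		return
	}
	resp, err := http.DefaultClient.Do(req)
	if err != nil {
		// This is expected when the local Functions host is not running.
		fmt.Println("request failed:", err)
		return
	}
	defer resp.Body.Close()

	text, _ := io.ReadAll(resp.Body)
	fmt.Println(resp.Status, string(text))
}
```

If the function from this practical is running locally, the response body should be the greeting produced by the template code.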

Press Shift + F5 to stop Core Tools and disconnect the debugger.

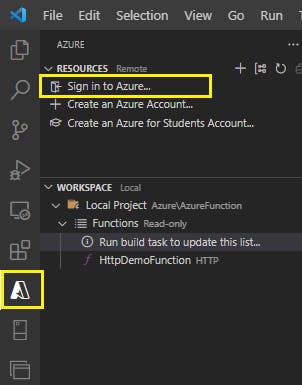

Step 6: Sign in to Azure

Choose the Azure icon in the Activity bar, then in the Azure: Functions area, choose Sign in to Azure....

When prompted in the browser, choose your Azure account and sign in using your Azure account credentials. After you've successfully signed in, you can close the new browser window. The subscriptions that belong to your Azure account are displayed in the Side bar.

Step 7: Create resources in Azure

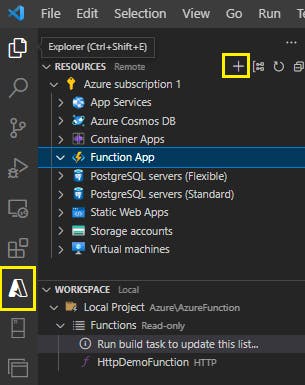

Choose the Azure icon in the Activity bar, then in the Resources area select the Create resource... button.

Provide the following information at the prompts:

Select Create Function App in Azure...

Enter a globally unique name for the function app: Type a name that is valid in a URL path. The name you type is validated to make sure that it's unique in Azure Functions.

Select a runtime stack: Use the same choice you made when you created the local project in Step 3.

Select a location for new resources: For better performance, choose a region near you.

Select subscription: Choose the subscription to use. You won't see this if you only have one subscription.

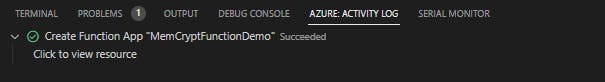

The extension shows the status of individual resources as they are being created in Azure in the AZURE: ACTIVITY LOG area of the terminal window.

When completed, the following Azure resources are created in your subscription, using names based on your function app name:

A resource group, which is a logical container for related resources.

A standard Azure Storage account, which maintains state and other information about your projects.

A consumption plan, which defines the underlying host for your serverless function app.

A function app, which provides the environment for executing your function code. A function app lets you group functions as a logical unit for easier management, deployment, and sharing of resources within the same hosting plan.

An Application Insights instance connected to the function app, which tracks usage of your serverless function.

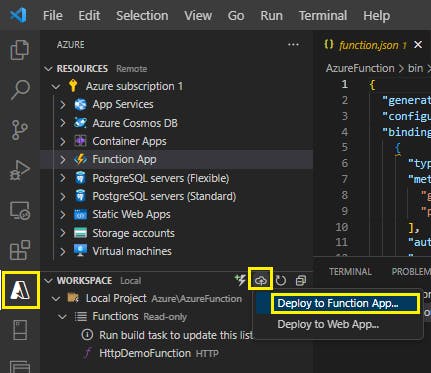

Step 8: Deploy the code to Azure

In the WORKSPACE section of the Azure bar select the Deploy... button, and then select Deploy to Function App....

When prompted to Select a resource, choose the function app you created in the previous step.

Confirm that you want to deploy your function by selecting Deploy on the confirmation prompt.

Important: Publishing to an existing function overwrites any previous deployments.

Step 9: Run the function in Azure

Back in the Resources area in the side bar, expand your subscription, your new function app, and Functions. Right-click the HttpDemoFunction function and choose Execute Function Now....

In Enter request body you see the request message body value of { "name": "Azure" }. Press Enter to send this request message to your function.

When the function executes in Azure and returns a response, a notification is raised in Visual Studio Code.

Custom Handlers

Custom handlers are lightweight web servers that receive events from the Functions host. Any language that supports HTTP primitives can implement a custom handler.

Custom handlers are best suited for situations where you want to:

Implement a function app in a language that's not currently offered out of the box, such as Go or Rust.

Implement a function app in a runtime that's not currently featured by default, such as Deno.

With custom handlers, you can use triggers and input and output bindings via extension bundles.

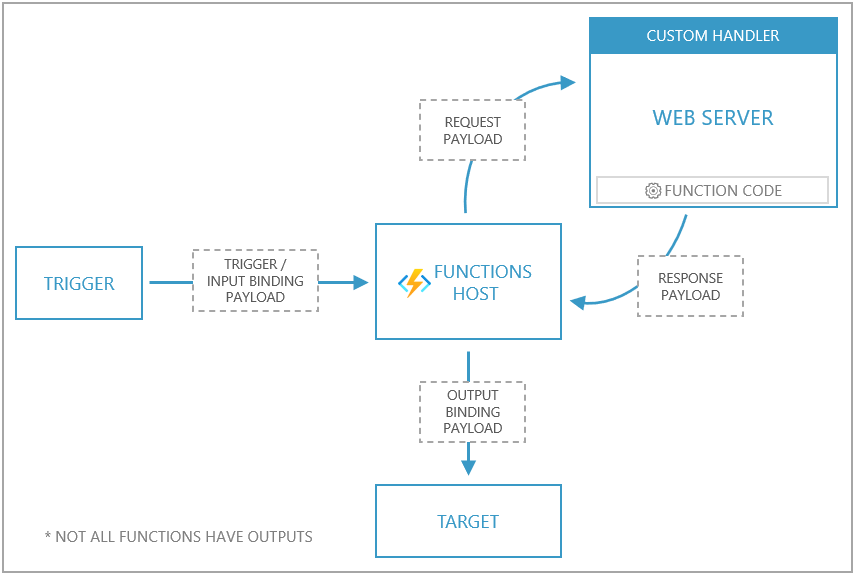

Overview

The diagram above shows the relationship between the Functions host and a web server implemented as a custom handler.

Each event triggers a request sent to the Functions host. An event is any trigger that is supported by Azure Functions.

The Functions host then issues a request payload to the web server. The payload holds trigger and input binding data and other metadata for the function.

The web server executes the individual function, and returns a response payload to the Functions host.

The Functions host passes data from the response to the function's output bindings for processing.

An Azure Functions app implemented as a custom handler must configure the host.json, local.settings.json, and function.json files according to a few conventions.

Application structure

To implement a custom handler, you need the following aspects to your application:

A host.json file at the root of your app

A local.settings.json file at the root of your app

A function.json file for each function (inside a folder that matches the function name)

A command, script, or executable, which runs a web server

The following diagram shows how these files look on the file system for a function named "MyQueueFunction" and a custom handler executable named handler.exe.

| /MyQueueFunction

|   function.json

|

| host.json

| local.settings.json

| handler.exe

Configuration

The application is configured via the host.json and local.settings.json files.

host.json

host.json tells the Functions host where to send requests by pointing to a web server capable of processing HTTP events.

A custom handler is defined by configuring the host.json file with details on how to run the web server via the customHandler section.

{

"version": "2.0",

"customHandler": {

"description": {

"defaultExecutablePath": "handler.exe"

}

}

}

The customHandler section points to a target as defined by the defaultExecutablePath. The execution target may either be a command, executable, or file where the web server is implemented.

Use the arguments array to pass any arguments to the executable. Arguments support expansion of environment variables (application settings) using %% notation.

You can also change the working directory used by the executable with workingDirectory.

{

"version": "2.0",

"customHandler": {

"description": {

"defaultExecutablePath": "app/handler.exe",

"arguments": [

"--database-connection-string",

"%DATABASE_CONNECTION_STRING%"

],

"workingDirectory": "app"

}

}

}
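To illustrate what the %% notation means for the arguments array, here is a minimal Go sketch that substitutes %NAME% placeholders from a map of application settings. It is an approximation written for this note, not the host's implementation; in particular, leaving unknown names untouched is an assumption, and `expandArgs` is a hypothetical name.

```go
package main

import (
	"fmt"
	"regexp"
)

// settingRef matches placeholders such as %DATABASE_CONNECTION_STRING%.
var settingRef = regexp.MustCompile(`%([A-Za-z0-9_]+)%`)

// expandArgs replaces each %NAME% with the matching application setting.
// Unknown names are left as-is (an assumption made for this sketch).
func expandArgs(args []string, settings map[string]string) []string {
	out := make([]string, len(args))
	for i, arg := range args {
		out[i] = settingRef.ReplaceAllStringFunc(arg, func(m string) string {
			name := m[1 : len(m)-1] // strip the surrounding % characters
			if v, ok := settings[name]; ok {
				return v
			}
			return m
		})
	}
	return out
}

func main() {
	args := []string{"--database-connection-string", "%DATABASE_CONNECTION_STRING%"}
	settings := map[string]string{"DATABASE_CONNECTION_STRING": "Server=db;Database=orders"}
	fmt.Println(expandArgs(args, settings))
}
```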

Bindings Support

Standard triggers along with input and output bindings are available by referencing extension bundles in your host.json file.

local.settings.json

local.settings.json defines application settings used when running the function app locally. As it may contain secrets, local.settings.json should be excluded from source control. In Azure, use application settings instead.

For custom handlers, set FUNCTIONS_WORKER_RUNTIME to Custom in local.settings.json.

{

"IsEncrypted": false,

"Values": {

"FUNCTIONS_WORKER_RUNTIME": "Custom"

}

}

Function metadata

When used with a custom handler, the function.json contents are no different from how you would define a function under any other context. The only requirement is that function.json files must be in a folder named to match the function name.

The following function.json configures a function that has a queue trigger and a queue output binding. Because it's in a folder named MyQueueFunction, it defines a function named MyQueueFunction.

MyQueueFunction/function.json

{

"bindings": [

{

"name": "myQueueItem",

"type": "queueTrigger",

"direction": "in",

"queueName": "messages-incoming",

"connection": "AzureWebJobsStorage"

},

{

"name": "$return",

"type": "queue",

"direction": "out",

"queueName": "messages-outgoing",

"connection": "AzureWebJobsStorage"

}

]

}

Request payload

When a queue message is received, the Functions host sends an HTTP POST request to the custom handler with a payload in the body.

The following code represents a sample request payload. The payload includes a JSON structure with two members: Data and Metadata.

The Data member includes keys that match input and trigger names as defined in the bindings array in the function.json file.

The Metadata member includes metadata generated from the event source.

{

"Data": {

"myQueueItem": "{ message: \"Message sent\" }"

},

"Metadata": {

"DequeueCount": 1,

"ExpirationTime": "2019-10-16T17:58:31+00:00",

"Id": "800ae4b3-bdd2-4c08-badd-f08e5a34b865",

"InsertionTime": "2019-10-09T17:58:31+00:00",

"NextVisibleTime": "2019-10-09T18:08:32+00:00",

"PopReceipt": "AgAAAAMAAAAAAAAAAgtnj8x+1QE=",

"sys": {

"MethodName": "QueueTrigger",

"UtcNow": "2019-10-09T17:58:32.2205399Z",

"RandGuid": "24ad4c06-24ad-4e5b-8294-3da9714877e9"

}

}

}
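A handler written in Go could read this payload roughly as follows. The sketch assumes the two-member shape shown above; `decodeQueueInvocation` is a hypothetical helper, and only DequeueCount is pulled out of the metadata to keep the example short.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// InvokeRequest matches the request payload: Data and Metadata.
type InvokeRequest struct {
	Data     map[string]json.RawMessage
	Metadata map[string]interface{}
}

// decodeQueueInvocation returns the queue message for the given binding
// name together with the DequeueCount reported in the metadata.
func decodeQueueInvocation(payload []byte, bindingName string) (string, int, error) {
	var req InvokeRequest
	if err := json.Unmarshal(payload, &req); err != nil {
		return "", 0, err
	}
	raw, ok := req.Data[bindingName]
	if !ok {
		return "", 0, fmt.Errorf("no data for binding %q", bindingName)
	}
	// The queue message arrives as a JSON string, so unquote it.
	var message string
	if err := json.Unmarshal(raw, &message); err != nil {
		return "", 0, err
	}
	// Numbers stored in interface{} values decode as float64.
	count := 0
	if n, ok := req.Metadata["DequeueCount"].(float64); ok {
		count = int(n)
	}
	return message, count, nil
}

func main() {
	payload := []byte(`{
	  "Data": { "myQueueItem": "{ message: \"Message sent\" }" },
	  "Metadata": { "DequeueCount": 1 }
	}`)
	message, count, err := decodeQueueInvocation(payload, "myQueueItem")
	fmt.Println(message, count, err)
}
```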

Response payload

By convention, function responses are formatted as key/value pairs. Supported keys include:

| Payload key | Data type | Remarks |
| --- | --- | --- |
| Outputs | object | Holds response values as defined by the bindings array in function.json. For instance, if a function is configured with a queue output binding named "myQueueOutput", then Outputs contains a key named myQueueOutput, which is set by the custom handler to the messages that are sent to the queue. |
| Logs | array | Messages appear in the Functions invocation logs. When running in Azure, messages appear in Application Insights. |
| ReturnValue | string | Used to provide a response when an output is configured as $return in the function.json file. |

This is an example of a response payload.

{

"Outputs": {

"res": {

"body": "Message enqueued"

},

"myQueueOutput": [

"queue message 1",

"queue message 2"

]

},

"Logs": [

"Log message 1",

"Log message 2"

],

"ReturnValue": "{\"hello\":\"world\"}"

}
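For comparison, a response of this shape can be produced from Go with a small struct and encoding/json. This is a sketch under the assumption that the three keys above are all that is needed; `buildResponse` is a hypothetical helper and the binding names are taken from the example payload.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// InvokeResponse matches the response payload keys: Outputs, Logs, ReturnValue.
type InvokeResponse struct {
	Outputs     map[string]interface{}
	Logs        []string
	ReturnValue interface{}
}

// buildResponse fills the "myQueueOutput" and "res" outputs and attaches logs.
func buildResponse(queueMessages []string, httpBody string, logs []string) ([]byte, error) {
	resp := InvokeResponse{
		Outputs: map[string]interface{}{
			"myQueueOutput": queueMessages,
			"res":           map[string]interface{}{"body": httpBody},
		},
		Logs: logs,
	}
	return json.Marshal(resp)
}

func main() {
	out, err := buildResponse(
		[]string{"queue message 1", "queue message 2"},
		"Message enqueued",
		[]string{"Log message 1", "Log message 2"},
	)
	if err != nil {
		fmt.Println("marshal failed:", err)
		return
	}
	fmt.Println(string(out))
}
```

Because ReturnValue is left unset here, it is serialized as null; set it only when an output is configured as $return.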

Examples

Custom handlers can be implemented in any language that supports receiving HTTP events. The following examples show how to implement a custom handler using the Go programming language.

Function with bindings

The scenario implemented in this example features a function named order that accepts a POST with a payload representing a product order. As an order is posted to the function, a Queue Storage message is created and an HTTP response is returned.

Implementation

In a folder named order, the function.json file configures the HTTP-triggered function.

order/function.json

{

"bindings": [

{

"type": "httpTrigger",

"direction": "in",

"name": "req",

"methods": ["post"]

},

{

"type": "http",

"direction": "out",

"name": "res"

},

{

"type": "queue",

"name": "message",

"direction": "out",

"queueName": "orders",

"connection": "AzureWebJobsStorage"

}

]

}

This function is defined as an HTTP triggered function that returns an HTTP response and outputs a Queue storage message.

At the root of the app, the host.json file is configured to run an executable file named handler.exe (handler in Linux or macOS).

{

"version": "2.0",

"customHandler": {

"description": {

"defaultExecutablePath": "handler.exe"

}

},

"extensionBundle": {

"id": "Microsoft.Azure.Functions.ExtensionBundle",

"version": "[1.*, 2.0.0)"

}

}

This is the HTTP request sent to the Functions runtime.

POST http://127.0.0.1:7071/api/order HTTP/1.1

Content-Type: application/json

{

"id": 1005,

"quantity": 2,

"color": "black"

}

The Functions runtime will then send the following HTTP request to the custom handler:

POST http://127.0.0.1:<FUNCTIONS_CUSTOMHANDLER_PORT>/order HTTP/1.1

Content-Type: application/json

{

"Data": {

"req": {

"Url": "http://localhost:7071/api/order",

"Method": "POST",

"Query": "{}",

"Headers": {

"Content-Type": [

"application/json"

]

},

"Params": {},

"Body": "{\"id\":1005,\"quantity\":2,\"color\":\"black\"}"

}

},

"Metadata": {

}

}

handler.exe is the compiled Go custom handler program that runs a web server and responds to function invocation requests from the Functions host.

package main

import (

"encoding/json"

"fmt"

"log"

"net/http"

"os"

)

type InvokeRequest struct {

Data map[string]json.RawMessage

Metadata map[string]interface{}

}

type InvokeResponse struct {

Outputs map[string]interface{}

Logs []string

ReturnValue interface{}

}

func orderHandler(w http.ResponseWriter, r *http.Request) {

var invokeRequest InvokeRequest

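// Decode the invocation payload sent by the Functions host.

if err := json.NewDecoder(r.Body).Decode(&invokeRequest); err != nil {

http.Error(w, err.Error(), http.StatusBadRequest)

return

}

// Data["req"] holds the original HTTP request as JSON.

var reqData map[string]interface{}

if err := json.Unmarshal(invokeRequest.Data["req"], &reqData); err != nil {

http.Error(w, err.Error(), http.StatusBadRequest)

return

}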

outputs := make(map[string]interface{})

outputs["message"] = reqData["Body"]

resData := make(map[string]interface{})

resData["body"] = "Order enqueued"

outputs["res"] = resData

invokeResponse := InvokeResponse{outputs, nil, nil}

responseJson, _ := json.Marshal(invokeResponse)

w.Header().Set("Content-Type", "application/json")

w.Write(responseJson)

}

func main() {

customHandlerPort, exists := os.LookupEnv("FUNCTIONS_CUSTOMHANDLER_PORT")

if !exists {

customHandlerPort = "8080"

}

mux := http.NewServeMux()

mux.HandleFunc("/order", orderHandler)

fmt.Println("Go server Listening on: ", customHandlerPort)

log.Fatal(http.ListenAndServe(":"+customHandlerPort, mux))

}

In this example, the custom handler runs a web server to handle HTTP events and is set to listen for requests via the FUNCTIONS_CUSTOMHANDLER_PORT.

Even though the Functions host received the original HTTP request at /api/order, it invokes the custom handler using the function name (its folder name). In this example, the function is defined at the path of /order. The host sends the custom handler an HTTP request at the path of /order.

As POST requests are sent to this function, the trigger data and function metadata are available via the HTTP request body. The original HTTP request body can be accessed in the payload's Data.req.Body.

The function's response is formatted into key/value pairs where the Outputs member holds a JSON value where the keys match the outputs as defined in the function.json file.

This is an example payload that this handler returns to the Functions host.

{

"Outputs": {

"message": "{\"id\":1005,\"quantity\":2,\"color\":\"black\"}",

"res": {

"body": "Order enqueued"

}

},

"Logs": null,

"ReturnValue": null

}

By setting the message output equal to the order data that came in from the request, the function outputs that order data to the configured queue. The Functions host also returns the HTTP response configured in res to the caller.

HTTP-only function

For HTTP-triggered functions with no additional bindings or outputs, you may want your handler to work directly with the HTTP request and response instead of the custom handler request and response payloads. This behavior can be configured in host.json using the enableForwardingHttpRequest setting.

Important: The primary purpose of the custom handlers feature is to enable languages and runtimes that do not currently have first-class support on Azure Functions. While it may be possible to run web applications using custom handlers, Azure Functions is not a standard reverse proxy. Some features such as response streaming, HTTP/2, and WebSockets are not available. Some components of the HTTP request such as certain headers and routes may be restricted. Your application may also experience excessive cold start.

To address these circumstances, consider running your web apps on Azure App Service.

The following example demonstrates how to configure an HTTP-triggered function with no additional bindings or outputs. The scenario implemented in this example features a function named hello that accepts a GET or POST.

Implementation

In a folder named hello, the function.json file configures the HTTP-triggered function.

hello/function.json

{

"bindings": [

{

"type": "httpTrigger",

"authLevel": "anonymous",

"direction": "in",

"name": "req",

"methods": ["get", "post"]

},

{

"type": "http",

"direction": "out",

"name": "res"

}

]

}

The function is configured to accept both GET and POST requests and the result value is provided via an argument named res.

At the root of the app, the host.json file is configured to run handler.exe and enableForwardingHttpRequest is set to true.

{

"version": "2.0",

"customHandler": {

"description": {

"defaultExecutablePath": "handler.exe"

},

"enableForwardingHttpRequest": true

}

}

When enableForwardingHttpRequest is true, the behavior of HTTP-only functions differs from the default custom handlers behavior in these ways:

The HTTP request does not contain the custom handlers request payload. Instead, the Functions host invokes the handler with a copy of the original HTTP request.

The Functions host invokes the handler with the same path as the original request including any query string parameters.

The Functions host returns a copy of the handler's HTTP response as the response to the original request.
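The path difference between the two modes can be summarized in a few lines of Go. This is only a restatement of the rules above in code form, with a hypothetical `handlerPath` function; it is not how the host is implemented.

```go
package main

import "fmt"

// handlerPath returns the path at which the Functions host would call the
// custom handler. With forwarding enabled, the original path and query
// string are reused; otherwise the function name is used as the path.
func handlerPath(functionName, originalPathAndQuery string, forwarding bool) string {
	if forwarding {
		return originalPathAndQuery
	}
	return "/" + functionName
}

func main() {
	fmt.Println(handlerPath("order", "/api/order", false))       // prints /order
	fmt.Println(handlerPath("hello", "/api/hello?name=x", true)) // prints /api/hello?name=x
}
```

This is why a handler for an HTTP-only function registers its route under /api/..., while a handler that receives the custom handler payload registers under the function name.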

The following is a POST request to the Functions host. The Functions host then sends a copy of the request to the custom handler at the same path.

POST http://127.0.0.1:7071/api/hello HTTP/1.1

Content-Type: application/json

{

"message": "Hello World!"

}

The handler.go file implements a web server and HTTP function.

package main

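import (

"fmt"

"io"

"log"

"net/http"

"os"

)

// helloHandler answers GET with a fixed string and echoes the body for other methods.

func helloHandler(w http.ResponseWriter, r *http.Request) {

w.Header().Set("Content-Type", "application/json")

if r.Method == "GET" {

w.Write([]byte("hello world"))

} else {

// io.ReadAll replaces the deprecated ioutil.ReadAll (Go 1.16 and later).

body, _ := io.ReadAll(r.Body)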

w.Write(body)

}

}

func main() {

customHandlerPort, exists := os.LookupEnv("FUNCTIONS_CUSTOMHANDLER_PORT")

if !exists {

customHandlerPort = "8080"

}

mux := http.NewServeMux()

mux.HandleFunc("/api/hello", helloHandler)

fmt.Println("Go server Listening on: ", customHandlerPort)

log.Fatal(http.ListenAndServe(":"+customHandlerPort, mux))

}

In this example, the custom handler creates a web server to handle HTTP events and is set to listen for requests via the FUNCTIONS_CUSTOMHANDLER_PORT.

GET requests are handled by returning a string, and POST requests have access to the request body.

The route for the hello function here is /api/hello, the same as the original request.

Note: The FUNCTIONS_CUSTOMHANDLER_PORT is not the public facing port used to call the function. This port is used by the Functions host to call the custom handler.

Deploying

A custom handler can be deployed to every Azure Functions hosting option. If your handler requires operating system or platform dependencies (such as a language runtime), you may need to use a custom container.

When creating a function app in Azure for custom handlers, we recommend you select .NET Core as the stack. A "Custom" stack for custom handlers will be added in the future.

To deploy a custom handler app using Azure Functions Core Tools, run the following command.

func azure functionapp publish $functionAppName

Note: Ensure all files required to run your custom handler are in the folder and included in the deployment. If your custom handler is a binary executable or has platform-specific dependencies, ensure these files match the target deployment platform.

Restrictions

- The custom handler web server needs to start within 60 seconds.

Durable Functions app patterns

Durable Functions can simplify complex, stateful coordination requirements in serverless applications.

Overview

Durable Functions is an extension of Azure Functions that lets you define stateful workflows by writing orchestrator functions, and stateful entities by writing entity functions, using the Azure Functions programming model.

Behind the scenes, the extension manages state, checkpoints, and restarts for you, allowing you to focus on your business logic.

Supported languages for Durable Functions: C#, Python, PowerShell, F#, JavaScript

Application Patterns

The primary use case for Durable Functions is simplifying complex, stateful coordination requirements in serverless applications.

The following application patterns benefit from using Durable Functions:

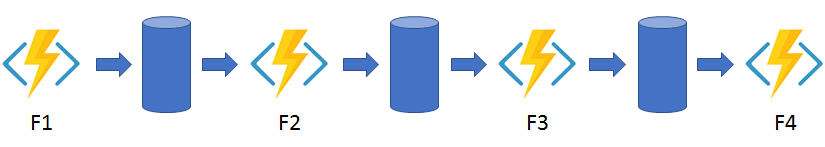

Function chaining

In the function chaining pattern, a sequence of functions executes in a specific order. In this pattern, the output of one function is applied to the input of another function.

In the code example below, the values F1, F2, F3, and F4 are the names of other functions in the function app. You can implement control flow by using normal imperative coding constructs. Code executes from the top down. The code can involve existing language control flow semantics, like conditionals and loops. You can include error handling logic in try/catch/finally blocks.

[FunctionName("Chaining")]

public static async Task<object> Run(

[OrchestrationTrigger] IDurableOrchestrationContext context)

{

try

{

var x = await context.CallActivityAsync<object>("F1", null);

var y = await context.CallActivityAsync<object>("F2", x);

var z = await context.CallActivityAsync<object>("F3", y);

return await context.CallActivityAsync<object>("F4", z);

}

catch (Exception)

{

// Error handling or compensation goes here.

throw; // Rethrow so that every code path either returns a value or throws.

}

}

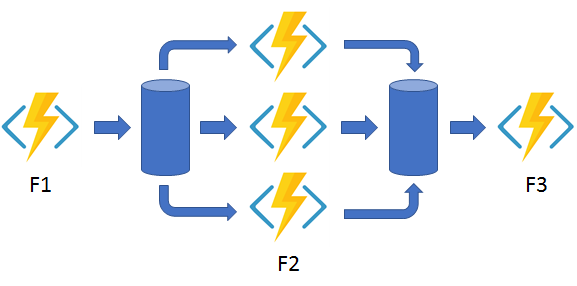

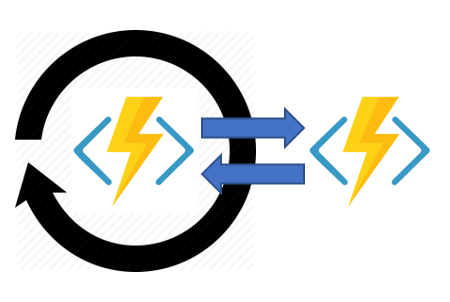

Fan-out/fan-in

In the fan out/fan in pattern, you execute multiple functions in parallel and then wait for all functions to finish. Often, some aggregation work is done on the results that are returned from the functions.

With normal functions, you can fan out by having the function send multiple messages to a queue. Fanning back in is harder: you have to write code to track when the queue-triggered functions end, and then store the function outputs.

In the code example below, the fan-out work is distributed to multiple instances of the F2 function. The work is tracked by using a dynamic list of tasks. The .NET Task.WhenAll API or JavaScript context.df.Task.all API is called, to wait for all the called functions to finish. Then, the F2 function outputs are aggregated from the dynamic task list and passed to the F3 function.

[FunctionName("FanOutFanIn")]

public static async Task Run(

[OrchestrationTrigger] IDurableOrchestrationContext context)

{

var parallelTasks = new List<Task<int>>();

// Get a list of N work items to process in parallel.

object[] workBatch = await context.CallActivityAsync<object[]>("F1", null);

for (int i = 0; i < workBatch.Length; i++)

{

Task<int> task = context.CallActivityAsync<int>("F2", workBatch[i]);

parallelTasks.Add(task);

}

await Task.WhenAll(parallelTasks);

// Aggregate all N outputs and send the result to F3.

int sum = parallelTasks.Sum(t => t.Result);

await context.CallActivityAsync("F3", sum);

}

The automatic checkpointing that happens at the await or yield call on Task.WhenAll or context.df.Task.all ensures that a potential midway crash or reboot doesn't require restarting an already completed task.

Async HTTP APIs

The async HTTP API pattern addresses the problem of coordinating the state of long-running operations with external clients. A common way to implement this pattern is by having an HTTP endpoint trigger the long-running action. Then, redirect the client to a status endpoint that the client polls to learn when the operation is finished.

Durable Functions provides built-in support for this pattern, simplifying or even removing the code you need to write to interact with long-running function executions. After an instance starts, the extension exposes webhook HTTP APIs that query the orchestrator function status.

The following example shows REST commands that start an orchestrator and query its status. For clarity, some protocol details are omitted from the example.

> curl -X POST https://myfunc.azurewebsites.net/orchestrators/DoWork -H "Content-Length: 0" -i

HTTP/1.1 202 Accepted

Content-Type: application/json

Location: https://myfunc.azurewebsites.net/runtime/webhooks/durabletask/b79baf67f717453ca9e86c5da21e03ec

{"id":"b79baf67f717453ca9e86c5da21e03ec", ...}

> curl https://myfunc.azurewebsites.net/runtime/webhooks/durabletask/b79baf67f717453ca9e86c5da21e03ec -i

HTTP/1.1 202 Accepted

Content-Type: application/json

Location: https://myfunc.azurewebsites.net/runtime/webhooks/durabletask/b79baf67f717453ca9e86c5da21e03ec

{"runtimeStatus":"Running","lastUpdatedTime":"2019-03-16T21:20:47Z", ...}

> curl https://myfunc.azurewebsites.net/runtime/webhooks/durabletask/b79baf67f717453ca9e86c5da21e03ec -i

HTTP/1.1 200 OK

Content-Length: 175

Content-Type: application/json

{"runtimeStatus":"Completed","lastUpdatedTime":"2019-03-16T21:20:57Z", ...}

The Durable Functions extension exposes built-in HTTP APIs that manage long-running orchestrations. You can alternatively implement this pattern yourself by using your own function triggers (such as HTTP, a queue, or Azure Event Hubs) and the orchestration client binding.
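On the client side, the polling shown in the curl transcript above amounts to repeating a GET until the status endpoint stops answering 202 Accepted. The following Go sketch illustrates that loop under stated assumptions: pollUntilDone and newFakeStatusServer are hypothetical names, and the local test server only imitates the 202 and 200 responses shown above. It is not a real function app.

```go
package main

import (
	"encoding/json"
	"fmt"
	"net/http"
	"net/http/httptest"
	"sync/atomic"
	"time"
)

// status holds the one field this sketch reads from the status payload.
type status struct {
	RuntimeStatus string `json:"runtimeStatus"`
}

// pollUntilDone (hypothetical helper) GETs statusURL until the server answers
// with something other than 202 Accepted, or maxAttempts is exhausted.
func pollUntilDone(statusURL string, interval time.Duration, maxAttempts int) (string, error) {
	for attempt := 0; attempt < maxAttempts; attempt++ {
		resp, err := http.Get(statusURL)
		if err != nil {
			return "", err
		}
		var s status
		decodeErr := json.NewDecoder(resp.Body).Decode(&s)
		resp.Body.Close()
		if decodeErr != nil {
			return "", decodeErr
		}
		if resp.StatusCode != http.StatusAccepted {
			return s.RuntimeStatus, nil
		}
		time.Sleep(interval)
	}
	return "", fmt.Errorf("still running after %d attempts", maxAttempts)
}

// newFakeStatusServer stands in for the function app: it reports "Running"
// with 202 twice, then "Completed" with 200.
func newFakeStatusServer() *httptest.Server {
	var calls int32
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		w.Header().Set("Content-Type", "application/json")
		if atomic.AddInt32(&calls, 1) <= 2 {
			w.WriteHeader(http.StatusAccepted)
			fmt.Fprint(w, `{"runtimeStatus":"Running"}`)
			return
		}
		fmt.Fprint(w, `{"runtimeStatus":"Completed"}`)
	}))
}

func main() {
	srv := newFakeStatusServer()
	defer srv.Close()

	final, err := pollUntilDone(srv.URL, 10*time.Millisecond, 10)
	fmt.Println(final, err)
}
```

Against a real app, statusURL would be the Location header returned by the initial POST, and the interval would normally be seconds rather than milliseconds.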

The following HTTP-triggered client function, HttpStart, starts instances of an orchestrator function.

public static class HttpStart

{

[FunctionName("HttpStart")]

public static async Task<HttpResponseMessage> Run(

[HttpTrigger(AuthorizationLevel.Function, methods: "post", Route = "orchestrators/{functionName}")] HttpRequestMessage req,

[DurableClient] IDurableClient starter,

string functionName,

ILogger log)

{

// Function input comes from the request content.

object eventData = await req.Content.ReadAsAsync<object>();

string instanceId = await starter.StartNewAsync(functionName, eventData);

log.LogInformation($"Started orchestration with ID = '{instanceId}'.");

return starter.CreateCheckStatusResponse(req, instanceId);

}

}

To interact with orchestrators, the function must include a DurableClient input binding. You use the client to start an orchestration. It can also help you return an HTTP response containing URLs for checking the status of the new orchestration.

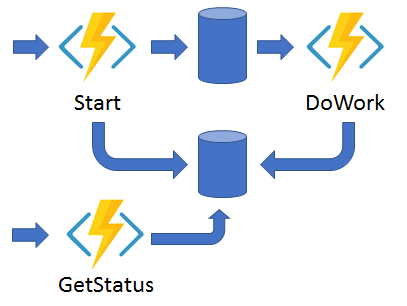

Monitor

The monitor pattern refers to a flexible, recurring process in a workflow. An example is polling until specific conditions are met. You can use a regular timer trigger to address a basic scenario, such as a periodic cleanup job, but its interval is static and managing instance lifetimes becomes complex. You can use Durable Functions to create flexible recurrence intervals, manage task lifetimes, and create multiple monitor processes from a single orchestration.

You can use Durable Functions to create multiple monitors that observe arbitrary endpoints. The monitors can end execution when a condition is met, or another function can use the durable orchestration client to terminate the monitors. You can change a monitor's wait interval based on a specific condition (for example, exponential backoff).

The following code implements a basic monitor:

[FunctionName("MonitorJobStatus")]

public static async Task Run(

[OrchestrationTrigger] IDurableOrchestrationContext context)

{

int jobId = context.GetInput<int>();

int pollingInterval = GetPollingInterval();

DateTime expiryTime = GetExpiryTime();

while (context.CurrentUtcDateTime < expiryTime)

{

var jobStatus = await context.CallActivityAsync<string>("GetJobStatus", jobId);

if (jobStatus == "Completed")

{

// Perform an action when a condition is met.

await context.CallActivityAsync("SendAlert", jobId);

break;

}

// Orchestration sleeps until this time.

var nextCheck = context.CurrentUtcDateTime.AddSeconds(pollingInterval);

await context.CreateTimer(nextCheck, CancellationToken.None);

}

// Perform more work here, or let the orchestration end.

}
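The monitor above waits a fixed pollingInterval between checks. The exponential backoff mentioned earlier changes only how the next wait is computed. The Go sketch below shows that arithmetic in isolation. nextInterval is a hypothetical helper, and the 5-second base and 60-second cap are assumed values, not ones taken from the sample.

```go
package main

import (
	"fmt"
	"time"
)

// nextInterval (hypothetical helper) doubles the wait once per completed
// attempt, starting at base and never exceeding limit.
func nextInterval(base, limit time.Duration, attempt int) time.Duration {
	if base >= limit {
		return limit
	}
	interval := base
	for i := 0; i < attempt; i++ {
		interval *= 2
		if interval >= limit {
			return limit
		}
	}
	return interval
}

func main() {
	// Print the wait that would precede each of the first six polls.
	for attempt := 0; attempt < 6; attempt++ {
		fmt.Println(attempt, nextInterval(5*time.Second, 60*time.Second, attempt))
	}
}
```

In an orchestrator, the computed delay would be added to context.CurrentUtcDateTime and passed to the durable timer, in place of the fixed pollingInterval.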

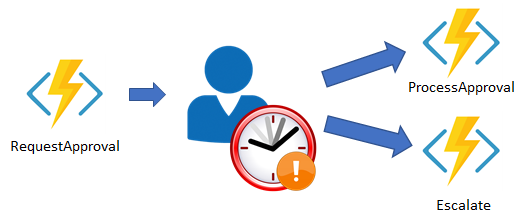

Human interaction

Many automated processes involve some kind of human interaction. Involving humans in an automated process is tricky because people aren't as highly available and as responsive as cloud services. An automated process might allow for this interaction by using timeouts and compensation logic.

You can implement the pattern in this example by using an orchestrator function. The orchestrator requests approval, then uses a durable timer to limit how long it waits for an external event, such as a notification that's generated by a human interaction. If the timeout occurs first, the orchestrator escalates.

[FunctionName("ApprovalWorkflow")]

public static async Task Run(

[OrchestrationTrigger] IDurableOrchestrationContext context)

{

await context.CallActivityAsync("RequestApproval", null);

using (var timeoutCts = new CancellationTokenSource())

{

DateTime dueTime = context.CurrentUtcDateTime.AddHours(72);

Task durableTimeout = context.CreateTimer(dueTime, timeoutCts.Token);

Task<bool> approvalEvent = context.WaitForExternalEvent<bool>("ApprovalEvent");

if (approvalEvent == await Task.WhenAny(approvalEvent, durableTimeout))

{

timeoutCts.Cancel();

await context.CallActivityAsync("ProcessApproval", approvalEvent.Result);

}

else

{

await context.CallActivityAsync("Escalate", null);

}

}

}
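The approval itself has to reach the waiting orchestrator from outside. One option, sketched here in Go under stated assumptions, is to POST to the raise-event URL that the check-status response returns as sendEventPostUri, after filling in its {eventName} placeholder. raiseEvent, newFakeHost, and the instance ID abc123 are hypothetical, and the local test server only stands in for the function app. A real URL also carries query parameters such as the access key.

```go
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
	"io"
	"net/http"
	"net/http/httptest"
	"strings"
)

// received captures what the fake host saw for one request.
type received struct {
	path string
	body string
}

// raiseEvent (hypothetical helper) fills the {eventName} placeholder in a
// sendEventPostUri-style template and POSTs the payload as JSON.
func raiseEvent(sendEventURI, eventName string, payload interface{}) (int, error) {
	body, err := json.Marshal(payload)
	if err != nil {
		return 0, err
	}
	target := strings.Replace(sendEventURI, "{eventName}", eventName, 1)
	resp, err := http.Post(target, "application/json", bytes.NewReader(body))
	if err != nil {
		return 0, err
	}
	defer resp.Body.Close()
	return resp.StatusCode, nil
}

// newFakeHost stands in for the function app: it reports each request on ch
// and answers 202 Accepted.
func newFakeHost(ch chan<- received) *httptest.Server {
	return httptest.NewServer(http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		data, _ := io.ReadAll(r.Body)
		ch <- received{path: r.URL.Path, body: string(data)}
		w.WriteHeader(http.StatusAccepted)
	}))
}

func main() {
	ch := make(chan received, 1)
	srv := newFakeHost(ch)
	defer srv.Close()

	template := srv.URL + "/runtime/webhooks/durabletask/instances/abc123/raiseEvent/{eventName}"
	status, err := raiseEvent(template, "ApprovalEvent", true)
	got := <-ch
	fmt.Println(status, err, got.path, got.body)
}
```

The event name must match the one the orchestrator passes to WaitForExternalEvent, and the JSON payload (here the boolean true) becomes the value of approvalEvent.Result.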

Types of Functions

There are currently four durable function types in Azure Functions: orchestrator, activity, entity, and client.

Orchestrator functions

Orchestrator functions describe how actions are executed and the order in which they are executed. They describe the orchestration in code (for example, C# or JavaScript). An orchestration can have many different types of actions, including activity functions, sub-orchestrations, waiting for external events, HTTP, and timers. Orchestrator functions can also interact with entity functions.

There are strict requirements on how to write the code. Specifically, orchestrator function code must be deterministic. Failing to follow these determinism requirements can cause orchestrator functions to fail to run correctly.

Note: The Orchestrator function code constraints article has detailed information on this requirement.

Activity functions